FinServe Big Data Analytics and the Cloud: Strategies and How-Tos

The future isn’t what it used to be in the financial services industry. The present isn’t, either. The past, for that matter, wasn’t as tranquil as might be thought. Roughly since the invention of the telegraph 175 years ago, the pressures on investment managers have remained the same: acceleration in the pace of change; the need for rapid analysis of broadening sources of information; globalization of competition; increasing regulatory intervention; and, in competition with passive investment strategies, the imperative for actionable ideas in the pursuit of alpha.

Add in our ongoing low-interest, highly volatile economic environment and you have present-day conditions.

Then add in big data technologies that can ingest, store, retrieve and analyze vast amounts of data and run queries against financial models at incredible speed. And add in public cloud services, which make advanced, scalable technologies available at (relatively) low cost, subject, of course, to restrictions on moving sensitive data off-premises.

Technology vendors would have you believe that big data is the answer to the problem of discovering new investment strategies that beat the index. But the problem with big data as a panacea is that as technology solves problems, it creates new ones. It’s true that big data technology can analyze vast datasets faster than ever, but a more profound truth is that the appetite for this capability will never be slaked. Just as the scale of today’s big data capabilities was undreamed of a few years ago, it will be dwarfed by 2025. So the real question becomes how best to leverage big data technology resources, both on- and off-prem, as effectively and efficiently as possible.

At a webinar this week hosted by Avere Systems (with IDC Financial Insights), Scott Jeschonek, Avere’s director of cloud products, said, “Inevitably, you’ll get faster servers over time, but it seems to me the demand profile will only increase, and the sophistication of the (financial) models is only going to increase, and certainly the data available to process from diverse sources is only going to increase. Not only that but if you’re a large enough firm you probably have multiple sites – Europe, Asia/Pac, looking to consume all that.”

Added William Fearnley, research director at IDC Financial Insights (and a former sell-side financial equity analyst), “Return on equity is a continuing focus, and it’s harder as well, especially in a lower interest rate environment, which is becoming more of a challenge for financial firms, banks in particular. Pressed margins are certainly putting more focus on operating costs. And leveraging technology, especially big data and analytics, are being used more to find actionable ideas and to find business operations insights much faster.”

The webinar, “Industry Insights: Efficiency and Scale in Middle Office Analytics for Risk, Compliance and Investment Alpha,” focused on the strain on IT resources caused by growth in the volume and complexity of simulations, and technology strategies for dealing with this challenge. The ultimate need, of course, is identifying investment strategies that beat the index.

“What we’re hearing is that good ideas are getting harder to find,” Fearnley said. That scarcity is pressuring financial managers to backtest at greater scale, using larger data sets that extend over longer time horizons and capture more market cycles, “to find cross assets and cross market correlations and anomalies for new opportunities.”

Feeding this growth in simulation scale is a phenomenon called DaaS, or Data as a Service: the aggregation of multiple sources of public information that bear on investment decisions, such as new government regulations, patent filings, M&A activity, interest rate changes, wars and the threat of wars, and trade decisions by the U.S. and foreign countries. “Brexit is a perfect example,” Fearnley said. DaaS can deliver specialized information about companies, about particular investment sectors, and even from the “dark web.”

“What’s happening is that with constant change, you need a global view, it’s presenting opportunities, but it’s building the case for big data and analytics to stay ahead of what’s happening, especially with the constant change in global markets,” Fearnley said. “You can aggregate all that data and take those incremental data points and put them into your investment analytics and into your model as well. DaaS is the new thing that investors have been asking about this year and we expect the trend to continue for the near and long term as well.”

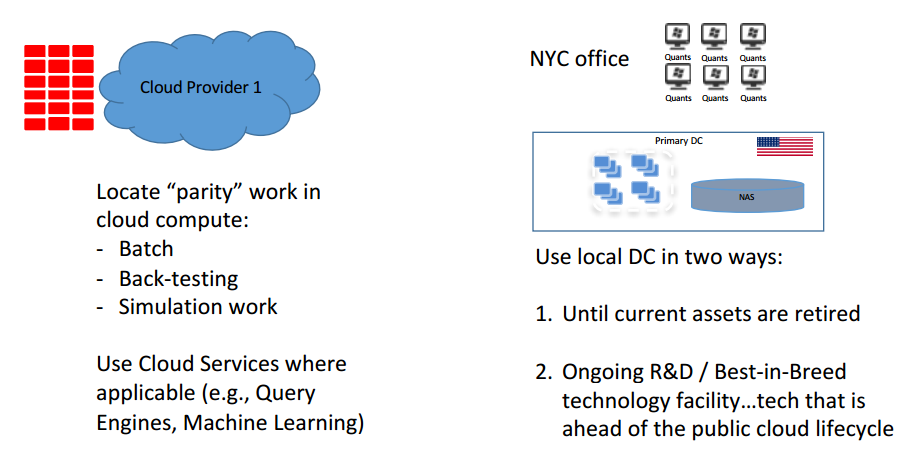

The computing strategy proposed by Fearnley and Jeschonek is a hybrid approach that divides workloads and data between on-prem computing resources and the public cloud. While proprietary and transactional data must stay on-premises, non-sensitive data (such as market data, news feeds and information from DaaS providers) can be processed in the public cloud.
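The placement rule behind this split can be expressed as a simple policy. The sketch below is a minimal illustration under assumed conditions: the category names (`proprietary`, `transactional`, `market`, `news`, `daas`) and the `place` function are hypothetical, not part of any product discussed in the webinar. It also assumes that a dataset with an unrecognized classification stays on-premises, so a labeling mistake errs on the side of keeping data in-house.

```python
from dataclasses import dataclass
from enum import Enum


class Site(Enum):
    ON_PREM = "on-prem"
    CLOUD = "public-cloud"


# Hypothetical data categories, following the split described in the article.
SENSITIVE_KINDS = {"proprietary", "transactional"}
CLOUD_ELIGIBLE_KINDS = {"market", "news", "daas"}


@dataclass(frozen=True)
class Dataset:
    name: str
    kind: str  # one of the hypothetical categories above


def place(ds: Dataset) -> Site:
    """Return where a dataset may be processed under a hybrid policy."""
    if ds.kind in SENSITIVE_KINDS:
        return Site.ON_PREM  # must stay on-premises
    if ds.kind in CLOUD_ELIGIBLE_KINDS:
        return Site.CLOUD  # non-sensitive: eligible for the public cloud
    return Site.ON_PREM  # unknown classification: fail closed


if __name__ == "__main__":
    for ds in [Dataset("client-trades", "transactional"),
               Dataset("tick-history", "market"),
               Dataset("patent-feed", "daas")]:
        print(ds.name, "->", place(ds).value)
```

Defaulting unknown data to on-prem is a design choice, not a requirement from the source; a firm could just as well reject unclassified data outright.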

“Accessing capability and capacity has changed,” Fearnley said. “What we’re seeing is a lot of firms combining internal and external capacity. Certainly secure data centers are more expensive, and cloud services are more attractive, and what we’re seeing is a lot of firms looking to combine the two.”

He described a typical situation in which the onsite data center available to investment managers is running at or near its limits, due to the ongoing pressure to make the most of on-premises resources. But as massive new sets of non-sensitive data become available for analysis, public cloud resources are ideal for handling burst capacity: analytics workloads that exceed what the onsite data center can absorb.

Jeschonek presented a “batch and burst” cloud strategy in which workloads that do not require immediate answers can be run overnight in batch mode, while others that require specialized computing capabilities involving large datasets can be burst out to the cloud.
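A batch-and-burst policy of this kind could be sketched as a small scheduler. In the hypothetical example below, each job carries a core count plus two flags (whether it needs an immediate answer and whether it touches sensitive data), and the on-prem data center is modeled as a single pool of free cores. Jobs that can wait go to the overnight batch queue, urgent jobs fill on-prem capacity first, and urgent overflow bursts to the cloud only if its data is non-sensitive. The names `Job` and `schedule` and the first-come, first-served ordering are assumptions for illustration, not Jeschonek’s actual design.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    cores: int
    urgent: bool     # needs an answer now, not overnight
    sensitive: bool  # touches data that must stay on-premises


def schedule(jobs, free_onprem_cores):
    """Assign each job to 'batch', 'on-prem', 'cloud-burst' or 'wait'.

    Jobs are considered in the order given (first come, first served).
    """
    plan = {}
    free = free_onprem_cores
    for job in jobs:
        if not job.urgent:
            plan[job.name] = "batch"  # no immediate answer needed: run overnight
        elif job.cores <= free:
            plan[job.name] = "on-prem"  # fits in the existing data center
            free -= job.cores
        elif not job.sensitive:
            plan[job.name] = "cloud-burst"  # overflow goes to rented cloud cores
        else:
            plan[job.name] = "wait"  # sensitive data cannot leave the premises
    return plan


if __name__ == "__main__":
    jobs = [
        Job("risk-eod", 2000, urgent=False, sensitive=False),
        Job("var", 800, urgent=True, sensitive=True),
        Job("backtest", 40000, urgent=True, sensitive=False),
        Job("pnl", 500, urgent=True, sensitive=True),
    ]
    print(schedule(jobs, free_onprem_cores=1000))
```

With 1,000 free on-prem cores, the end-of-day risk job is deferred to batch, the VaR job takes 800 on-prem cores, the large backtest bursts to the cloud, and the sensitive P&L job waits because only 200 on-prem cores remain. As Jeschonek notes, the batch queue itself could also run in the cloud; the sketch leaves that site choice open.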

“It might seem counterintuitive to run batch in the cloud,” he said, “but if you think about it, there are good reasons you might want to consider it. The compute section of the cloud is in some ways a no brainer for working on batch type applications that are of a high core count.”

The advantages of the cloud include reduced strain on internal computing resources, cost savings and the ability to scale up and down quickly, a.k.a. “capacity on demand.”

“In a volatile interest rate environment, a lot more attention is being paid to paying for (compute) capacity as it’s used,” Fearnley said. “But then firms also need to balance the combination of internal as well as external resources. What’s interesting is as you look at what some of the cloud providers are providing, from a capacity perspective you can be very specific about adding incremental cores and storage capacity. We expect that to continue, and the fact that you can micro-manage those on demand resources is increasingly attractive to investment management firms.”

Jeschonek outlined a cloud scenario in which computationally intensive simulations involving read-heavy workloads use roughly 40,000 cores accessing 500 GB of data.

“When it’s done you just want to destroy the whole thing so it all goes away,” he said. “That’s tailor-made for the cloud. And there are people doing it for real in production today, that’s not a hypothetical.”

The cloud’s ability to deliver powerful compute resources quickly also means faster simulation runs and fewer simulation iterations, without waiting for capacity to free up in the internal data center.

Investment managers want to “assemble the data, do their test or do their simulation, perhaps a Monte Carlo simulation, in the fewest number of iterations possible,” Fearnley said. “The obvious benefits: you can find answers faster; it also confirms strategies faster. But it can also improve the efficiency of an analyst and portfolio manager if they (simulations) fail faster. So if they have an idea, they can test it quickly and in a few iterations they can say it’s a good idea that needs more attention and needs more work, or they can say it’s a bad idea that doesn’t warrant more time, and move on to something else.”
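The “fail faster” idea can be made concrete with a Monte Carlo loop that checks its own uncertainty as it goes. The sketch below is an illustration under stated assumptions, not a method described in the webinar: `simulate` stands in for a hypothetical model that returns one simulated excess return per call, and the loop runs in batches, stopping as soon as an approximate 99% confidence interval (z = 2.58) around the mean lies entirely above or below a hurdle rate. A clearly bad idea is then discarded after the first batch instead of consuming a full run.

```python
import random
import statistics


def evaluate_idea(simulate, hurdle=0.0, batch=1_000, max_batches=20,
                  z=2.58, seed=0):
    """Run a Monte Carlo test in batches and stop as soon as it is decisive.

    simulate(rng) is a hypothetical model returning one simulated excess
    return. batch must be at least 2 so a standard deviation exists.
    Returns (verdict, number_of_samples_used).
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(max_batches):
        samples.extend(simulate(rng) for _ in range(batch))
        mean = statistics.fmean(samples)
        half_width = z * statistics.stdev(samples) / len(samples) ** 0.5
        if mean - half_width > hurdle:
            return "promising", len(samples)  # clearly beats the hurdle
        if mean + half_width < hurdle:
            return "discard", len(samples)  # clearly fails: move on
    return "inconclusive", len(samples)


if __name__ == "__main__":
    # Toy strategies: normally distributed returns with a small negative
    # or positive edge (illustrative numbers only).
    print(evaluate_idea(lambda rng: rng.gauss(-0.01, 0.02)))
    print(evaluate_idea(lambda rng: rng.gauss(0.01, 0.02)))
```

Checking the interval after every batch inflates the chance of a false call; a production version would use a proper sequential test. The point of the sketch is the structure (simulate a little, check, stop early), which is what lets an analyst drop a bad idea after a few iterations.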

A hybrid cloud approach also helps organizations transition away from exclusive reliance on internal data center resources. Jeschonek said there can be reluctance to move to the cloud because there’s “the problem of I have to continue to do business over time. So how do I maintain business state while I consider expansion into the cloud? So the hybrid cloud approach allows you to maintain steady state in your current data centers. You can decide if you’re going to expand them or freeze them, but you can still fully consume them, while at the same time leveraging one or two cloud providers to offset some of the workloads. And then the jobs can be submitted based on the best case scenario.”

When organizations begin using public cloud resources, there can be something of a transformative effect on the overall IT strategy. The ultimate effect of incorporating external, on-demand resources for batch and burst workloads, Jeschonek said, can be to place internal resources in a more strategic role.

“Consider using the public cloud in the immediate term to take some of your parity workloads that are predictable,” Jeschonek said. “You use the cloud providers’ computational environments with an eye to the cost side of your problem because you know you’ll be able to spawn enough nodes or servers to offset the performance side of the function.

“That allows you to take the load off your on-premises data center,” he said, “and suddenly you start thinking of your on-premises data center as your weapon, something you can look at and build out high-test, high performance, best-of-breed solutions. Those solutions might all ultimately, some day, move to the cloud, but suddenly you are doing R&D for your core business, as opposed to just filling infrastructure based on demand. It really increases (the role) of the IT organization and you’re using the cloud.”