Nvidia Adds ‘Parker’ CPU to ‘Brain of Autonomous Vehicles’

Nvidia’s autonomous vehicle strategy took a step forward this week with the announcement of a new mobile CPU, called “Parker,” which will be offered to automakers as a single unit or integrated into the company’s DRIVE PX 2 platform. That platform was announced at CES earlier this year. DRIVE PX 2 will include two Parker chips and two Pascal GPUs that, taken together, will deliver 24 trillion operations per second to run complex deep learning-based inference algorithms, according to Nvidia.

Parker was announced at the Hot Chips conference in Cupertino, CA, with product details released in a blog post by Danny Shapiro, Nvidia’s senior director of automotive.

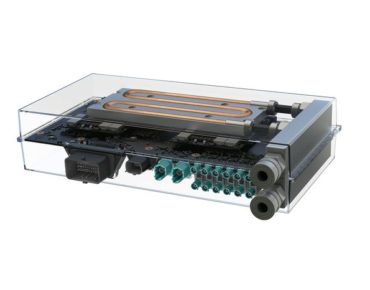

Leaving aside the vehicles themselves, the market for the technology that drives autonomous cars and trucks could exceed $40 billion by 2025, according to Boston Consulting Group. Nvidia said more than 80 car makers, tier 1 suppliers and university research centers use the DRIVE PX 2 system (pictured above), including Ford, Audi, Daimler and Volvo. Volvo plans to road test DRIVE PX 2 systems in XC90 SUVs next year.

Shapiro told EnterpriseTech in an email interview that after the DRIVE PX 2 launch, customers “unanimously” called for more compute power within the platform.

“We believe with the introduction of Parker in DRIVE PX 2, that this is more than a ‘stepping stone’ and DRIVE PX 2…can serve as the brain of autonomous vehicles,” said Shapiro. “Such systems deliver the supercomputer level of performance that self-driving cars need to safely navigate through all kinds of driving environments.”

Shapiro said Nvidia’s autonomous vehicle strategy is to develop an “open supercomputing platform for the car” that runs deep neural networks and serves as a centralized computer taking inputs from cameras, radar, lidar and ultrasonic sensors. The ultimate goal, he said, is to develop DRIVE PX 2 into a system “designed for artificial intelligence and deep learning, as opposed to just traditional computer vision.”

Nvidia claims that compared with other mobile processors, Parker delivers 50 to 100 percent higher multi-core CPU performance, which the company attributes to an architecture consisting of two 64-bit Nvidia Denver CPU cores (Denver 2.0) paired with four 64-bit ARM Cortex A57 CPUs working “together in a fully coherent heterogeneous multi-processor configuration.”

Parker is architected to support both decode and encode of video streams up to 4K resolution at 60 frames per second. “This will enable automakers to use higher resolution in-vehicle cameras for accurate object detection, and 4K display panels to enhance in-vehicle entertainment experiences,” Nvidia said.
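
To put the 4K, 60-frames-per-second figure in perspective, the uncompressed data rate of a single such stream can be estimated with simple arithmetic. The sketch below is illustrative only: it assumes “4K” means 3840 x 2160 pixels and that frames use 8-bit YUV 4:2:0 sampling (12 bits per pixel on average). Neither assumption comes from Nvidia’s announcement.

```python
# Rough estimate of the uncompressed data rate of one 4K/60 video stream.
# Assumptions (not from Nvidia's announcement): "4K" is 3840 x 2160, and
# frames are 8-bit YUV 4:2:0, which averages 12 bits per pixel.
WIDTH = 3840
HEIGHT = 2160
FRAMES_PER_SECOND = 60
BITS_PER_PIXEL = 12

pixels_per_second = WIDTH * HEIGHT * FRAMES_PER_SECOND
raw_bits_per_second = pixels_per_second * BITS_PER_PIXEL

print(f"Pixels per second: {pixels_per_second:,}")
print(f"Raw bit rate: {raw_bits_per_second / 1e9:.2f} Gbit/s")
```

Under those assumptions a single camera produces roughly 6 Gbit/s of raw pixel data, which helps explain why video encode and decode at this resolution is typically handled by dedicated hardware.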

The Denver 2.0 CPU is a seven-way superscalar processor that supports the ARM v8 instruction set and implements a code optimization algorithm, along with additional low-power retention states for energy efficiency, Nvidia said. In addition, a new 256-core Pascal GPU in Parker runs deep learning inference algorithms, “and it offers the raw graphics performance and features to power multiple high-resolution displays, such as cockpit instrument displays and in-vehicle infotainment panels.”

Parker includes a dual-CAN (controller area network) interface to connect to the electronic control units in cars, and Gigabit Ethernet to transport audio and video streams. Compliance with ISO 26262 (safety standard for electronic systems used in cars) is achieved through features implemented in hardware, such as a dedicated dual-lockstep processor for fault detection and processing.

Shapiro said fault detection “refers to faults and errors that are highly unlikely, but could potentially occur due to alpha or neutrons (cosmic rays) striking the ASIC that can cause (depending on where they strike) failures that can translate into hazards.

“Where possible (depending on the type of fault that occurs), we correct those faults,” Shapiro said. “But that’s not sufficient. You have to also reliably report the faults (especially the ones that are not corrected) such that the external supervisor (typically an ASIL D micro-controller) knows that there is a fault and enables the system to fail-safe or fail-operational (depending on the safety goal and safety solution end to end).”
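
The detect-and-report pattern Shapiro describes can be illustrated in software, although in Parker it is implemented in hardware. The following is a minimal sketch of the general lockstep idea, not Nvidia’s design: the same computation runs twice, the results are compared, and any mismatch is reported to a supervisor that moves the system to a safe state. The names `Supervisor`, `lockstep` and `inject_fault` are hypothetical and exist only for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Supervisor:
    """Hypothetical stand-in for the external safety micro-controller."""
    faults: List[str] = field(default_factory=list)
    safe_state: bool = False

    def report(self, message: str) -> None:
        # Record the fault and switch the system to its safe state.
        self.faults.append(message)
        self.safe_state = True


def lockstep(fn: Callable[[int], int], value: int, supervisor: Supervisor,
             inject_fault: bool = False) -> Optional[int]:
    """Run fn twice and compare; report to the supervisor on a mismatch."""
    primary = fn(value)
    shadow = fn(value)
    if inject_fault:
        # Simulate a particle strike by flipping the lowest bit of one result.
        shadow ^= 1
    if primary != shadow:
        supervisor.report(f"lockstep mismatch: {primary} != {shadow}")
        return None  # The result cannot be trusted, so none is returned.
    return primary


if __name__ == "__main__":
    sup = Supervisor()
    print(lockstep(lambda v: v * 2, 21, sup))                     # normal run
    print(lockstep(lambda v: v * 2, 21, sup, inject_fault=True))  # faulty run
    print(sup.safe_state, sup.faults)
```

In this sketch a mismatch is only detected and reported, never corrected, which mirrors Shapiro’s point that faults that cannot be corrected must still reach the supervisor reliably.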