Cramming 220 PB In A Modular Datacenter

Don't try to lift this by yourself. In the search for ever-cheaper and ever-denser storage, the customers that drive the Data Center Solutions unit at Dell have asked the company to forge a storage-heavy dual-node server that could cram nearly a quarter of an exabyte of data into a single modular datacenter container.

The DCS unit doesn't do announcements as such, but it does lift the veil on its custom machines from time to time to give a hint at the kinds of hardware engineering it is doing and the sort of products that could be commercialized in the event that demand for these bespoke products goes beyond the top 20 or 30 hyperscale datacenter operators. In a blog post, Tracy Davis, general manager of the DCS unit within Dell's PowerEdge server organization, took the wraps off the DCS XA90, one of the densest storage servers available today.

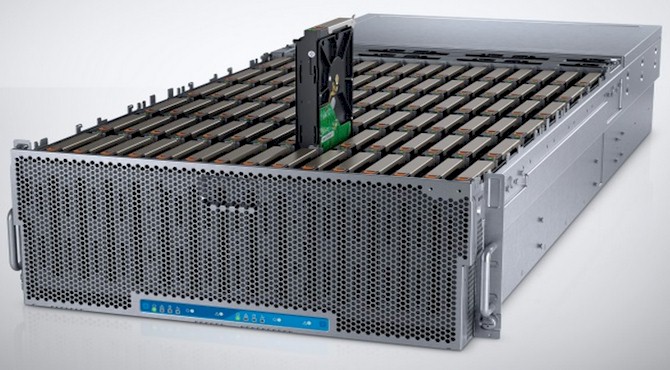

While the DCS XA90 is not the first storage server that crams a lot of inexpensive and fat 3.5-inch SATA drives into a chassis with a server node in it, this device can lay some claim to density. The 4U chassis holds a total of 90 such drives in a standard-width 19-inch form factor, and does so by mounting the drives vertically into a midplane at the bottom of the enclosure. Most fat storage servers have banks of drives that are hot-pluggable in the front of the enclosure, and some jam another row into the back of the chassis with compute stuck in the middle. The DCS XA90 puts not one server node, but two, at the back of the chassis, which means as many as 45 drives can be allocated to each node in the enclosure. Those compute nodes are based on Intel's latest "Haswell" Xeon E5-2600 v3 processors, which were announced in early September. Dell is supporting the 10-core and 12-core variants of the Haswell Xeon E5s in this storage server, which puts 40 or 48 cores and 90 drives in a single chassis. The server nodes can be equipped with 16 GB or 32 GB DDR4 memory sticks running at 2.13 GHz, which means Dell is not skimping here, and they have a combined four PCI-Express 3.0 slots across the nodes. Each server node can have its own 200 GB eMLC SSD or 1 TB SATA drive spinning at 7.2K RPM.
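The core and drive counts above follow from simple multiplication, sketched below in Python. The two-sockets-per-node figure is an assumption inferred from the 40-core and 48-core totals, not something Dell states directly, and the function names are purely illustrative.

```python
# Back-of-the-envelope check of the per-chassis figures quoted above.
NODES_PER_CHASSIS = 2      # from the article: two server nodes per 4U chassis
SOCKETS_PER_NODE = 2       # ASSUMPTION: inferred from the 40/48 core totals
DRIVES_PER_CHASSIS = 90    # from the article


def cores_per_chassis(cores_per_cpu: int) -> int:
    """Total cores in one chassis for a given processor core count."""
    return NODES_PER_CHASSIS * SOCKETS_PER_NODE * cores_per_cpu


def drives_per_node() -> int:
    """Drives available to each node if the 90 drives are split evenly."""
    return DRIVES_PER_CHASSIS // NODES_PER_CHASSIS


if __name__ == "__main__":
    for cores in (10, 12):
        print(f"{cores}-core CPUs: {cores_per_chassis(cores)} cores per chassis")
    print(f"Drives per node: {drives_per_node()}")
```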

The DCS XA90 storage server uses 6 TB or 8 TB SATA drives that also spin at 7.2K RPM, the latter of which are now becoming available from Seagate Technology. The machine can be equipped with PMC Sierra 8805 or 81605 RAID controllers and can have optional 10 Gb/sec and 40 Gb/sec ConnectX-3 Pro dual-port Ethernet controllers from Mellanox Technologies to link to the outside world. With 8 TB drives, the DCS XA90 storage server would hold 720 TB of storage. It is not clear if a rack can be packed with ten of these units and still hold up under the weight of the drives, but Davis did say that a single containerized modular data center from Dell could house up to 220 PB of raw disk capacity using the DCS XA90s. That works out to roughly 300 of these storage servers and about 27,000 disk drives.
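For readers who want to check the capacity math, here is a minimal sketch. It assumes decimal units (1 PB = 1,000 TB) and raw capacity with no RAID or file system overhead; the function names are illustrative. Note that 300 servers with 8 TB drives come to 216 PB, which rounds to the 220 PB figure Dell cites.

```python
import math

DRIVES_PER_CHASSIS = 90   # from the article
TB_PER_PB = 1000          # ASSUMPTION: decimal units, as drive makers use


def chassis_capacity_tb(drive_tb: int) -> int:
    """Raw capacity of one fully populated chassis, in TB."""
    return DRIVES_PER_CHASSIS * drive_tb


def container_capacity_pb(servers: int, drive_tb: int) -> float:
    """Raw capacity of a container holding `servers` chassis, in PB."""
    return servers * chassis_capacity_tb(drive_tb) / TB_PER_PB


def servers_needed(target_pb: float, drive_tb: int) -> int:
    """Whole chassis required to reach at least `target_pb` of raw capacity."""
    return math.ceil(target_pb * TB_PER_PB / chassis_capacity_tb(drive_tb))


if __name__ == "__main__":
    print(chassis_capacity_tb(8))          # TB per chassis with 8 TB drives
    print(container_capacity_pb(300, 8))   # PB in 300 chassis
    print(300 * DRIVES_PER_CHASSIS)        # drives in 300 chassis
    print(servers_needed(220, 8))          # chassis for a full 220 PB
```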

No word on what this monster storage array costs, but given that it is a DCS product, you would have to buy a lot of them to be able to engage with Dell. It is very likely, in fact, that this product traces its lineage to one of the big clouds or hyperscale datacenter operators. The Open Vault storage array designed by Facebook for its Prineville, Oregon, datacenter is a much more complex device, with two trays, each holding 15 3.5-inch SATA drives, connected by a hinge and jammed into a 2U chassis that is a little bit wider than a standard 19-inch rack. Measured in drives per rack unit, Dell has 50 percent greater storage density in its device than the Facebook design, which has far more moving parts and no internal compute.
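The 50 percent figure can be reproduced by comparing drives per rack unit, as in the sketch below. This is an approximation: it counts height only and ignores the fact that the Open Vault chassis is slightly wider than a standard 19-inch rack. The helper names are illustrative.

```python
def drives_per_u(drives: int, rack_units: int) -> float:
    """Drive density expressed as drives per rack unit of height."""
    return drives / rack_units


def percent_denser(a: float, b: float) -> float:
    """How much denser `a` is than `b`, as a percentage."""
    return (a / b - 1) * 100


# Figures from the article: 90 drives in 4U versus two 15-drive trays in 2U.
xa90 = drives_per_u(90, 4)
open_vault = drives_per_u(2 * 15, 2)

if __name__ == "__main__":
    print(f"DCS XA90:   {xa90} drives per U")
    print(f"Open Vault: {open_vault} drives per U")
    print(f"Advantage:  {percent_denser(xa90, open_vault):.0f} percent")
```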

Getting Hyper – Converged, That Is

On the other end of the storage spectrum, Dell has rolled out its promised PowerEdge XC line of hyperconverged clusters running the Nutanix Operating System and its Nutanix Distributed File System, which implements a virtual SAN across those clusters. Like Nutanix, Dell is supporting the use of Nutanix atop either VMware's ESXi hypervisor or Microsoft's Hyper-V on the cluster nodes. (Support for the KVM hypervisor is in the works from Nutanix.)

Dell is offering different hardware configurations from the appliances sold by Nutanix, but the idea is the same. Server nodes are configured with flash and disk storage and virtualized server workloads are run on the same nodes as the virtual SAN software, which is what gives this approach its moniker of "hyperconverged." (In the server lingo, converged meant bringing server and networking hardware into the same physical devices, and hyperconverged means bringing in virtualized storage in addition to virtualized compute and running across clusters of machines.)

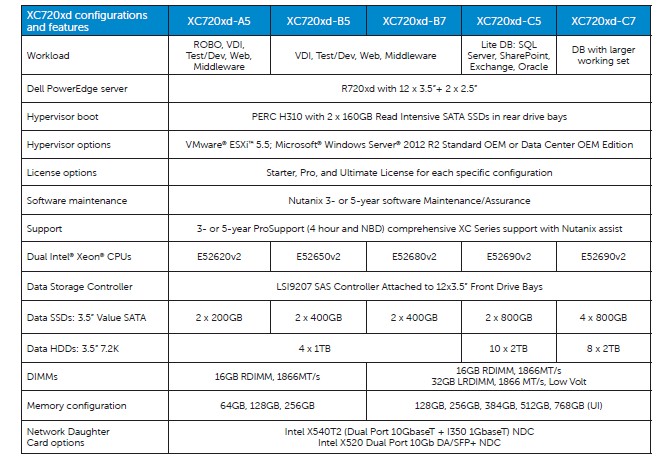

The appliances from Nutanix are based on Supermicro systems, and Dell said back in June when it announced the deal with Nutanix that it would have two variants of its own Nutanix appliances, one based on regular PowerEdge servers and another based on its semi-custom PowerEdge C6220 machines designed for hyperscale customers. The machines announced this week are based on its plain vanilla PowerEdge R720xd servers, and here are the configurations:

Travis Vigil, executive director of product management for storage at Dell, tells EnterpriseTech that the PowerEdge XC machines include the Nutanix software and three years of support in their price. The PowerEdge XC720xd-A5 model costs $46,000 per node while the much more capacious XC720xd-C7 machine costs $190,000 per node. Dell will start shipping them on November 11. The big benefit as far as enterprise customers are concerned is that if they already have Dell iron, they can get the Nutanix virtual storage on Dell iron, including Dell sales and support. Dell also can provide customers with a complete hyperconverged setup for VDI workloads, including endpoints and management software for those endpoints, thanks to its acquisition of Wyse Technology a few years back.

Dell does not expect customers to switch immediately from actual SANs to virtual ones based on either the Nutanix software or the EVO:RAIL or EVO:RACK hyperconverged designs architected by VMware using its VSAN software. "This is a paradigm shift and it is going to take time," says Vigil. "We believe it will be focused on greenfield opportunities and we do not believe that people will rip out the storage they have and rearchitect."