Dell Pushes Up Server Density With FX

Since founding its Data Center Solutions unit in May 2006 (an effort that began with a custom server drawn on the back of a bar napkin at the Driskill Hotel in Austin, Texas), Dell has learned a lot about the varied system and storage needs of enterprise, hyperscale, and supercomputing customers. The PowerEdge FX line pulls all of that engineering together, taking the lessons learned from hyperscale and applying them to enterprise and HPC customers who want density but who also need more variety in their machines than the typical hyperscale shop does.

All system buyers want their hardware configured to run their software stacks as efficiently as possible. It makes no sense to overprovision machines running distributed workloads, because any extra processing power, memory, or storage is wasted capital. At hyperscale datacenters, the hardware is literally designed and tuned along with the software, and these companies have so few workloads that they need only a handful of makes and models of machines to support them. This is less true of supercomputing centers, which tend to have a larger number of workloads, and it is certainly not true of large enterprises, which typically have thousands of workloads and therefore need a wider variety of iron. Engineering a set of systems that spans all of these diverse needs is tricky, and that is precisely what the PowerEdge FX line is trying to do.

"Rack and blade servers are used in the enterprise, and they have their strengths and weaknesses," explains Brian Payne, vice president of platform marketing, who gave EnterpriseTech a preview of the machines from Dell's New York offices. Rack servers are still the preferred form factor for hyperscale operators and the dominant one in the enterprise, but they lack the density and cable aggregation of blades, and they are neither as compact nor as power-efficient as the same amount of compute crammed into a blade chassis. Blade servers have those features, but their integrated networking and management and their redundant components make them a poor fit for hyperscale and HPC users, who are extremely sensitive to overall cost. Moreover, you cannot start out small with a blade server and match the low per-node cost of a rack server. "And with hyperscale workloads, the ability to support lots of disks inside the server is critical."

With modern distributed workloads, such as Hadoop analytics, NoSQL data stores, virtual server stacks and clouds, and even hyperconverged platforms that turn clusters into virtual storage area networks as well as compute farms, having fast, compact local storage on each node is increasingly important. The FX architecture that Dell is rolling out blends the best bits of rack and blade servers and also borrows from some of the custom system designs of the DCS unit to create a modular system that can meet that wide variety of needs.

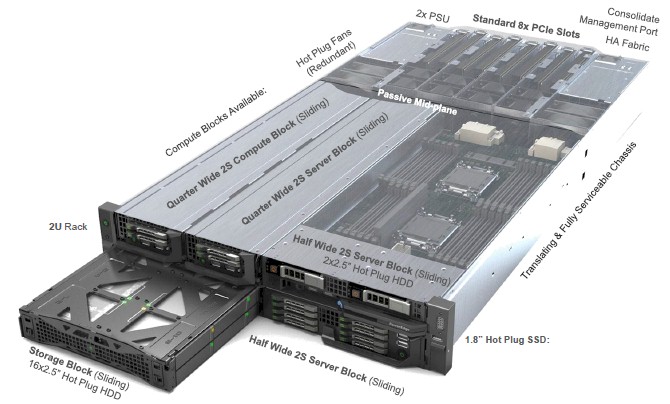

The PowerEdge FX2 system is the first in the line, and it starts with a 2U rack enclosure, the standard form factor for most machines in the enterprise. The FX2 chassis supports multiple processor and storage sleds, which slide into the chassis, as well as switch modules for those who want blade-style integrated switching and passthrough units for those who prefer a hyperscale-style setup. Depending on how many processors each node packs into a single system image and what kind of processors it uses, the FX2 chassis can hold two, four, or eight sleds and from two to sixteen nodes in that 2U enclosure. This is considerably more density than Dell is able to offer with its PowerEdge M1000e series of blade servers. (We will get into that in a moment.)
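The sled and node counts in the paragraph above can be checked with a small model. This is a sketch under our own assumptions: we treat the front of the FX2 as four half-width bays, with a quarter-width sled taking half a bay and a full-width sled taking two, and we use the four sled types (FC830, FC630, FM120x4, FC430) described later in this article. The `SLEDS` table and `fill_enclosure` helper are hypothetical illustrations, not a Dell tool or API.

```python
# Hypothetical model of the FX2 front bays: four half-width slots in 2U.
# Sled widths and nodes per sled follow the article; the "slot units"
# abstraction is our own simplification.
HALF_WIDTH_SLOTS = 4

# sled name -> (half-width slots consumed, server nodes per sled)
SLEDS = {
    "FC830": (2.0, 1),    # full-width, four-socket node
    "FC630": (1.0, 1),    # half-width, two-socket node
    "FM120x4": (1.0, 4),  # half-width, four Avoton nodes
    "FC430": (0.5, 1),    # quarter-width, two-socket node
}

def fill_enclosure(sled: str) -> tuple[int, int]:
    """Return (sleds, nodes) for an FX2 filled with a single sled type."""
    slots, nodes_per_sled = SLEDS[sled]
    sleds = int(HALF_WIDTH_SLOTS / slots)
    return sleds, sleds * nodes_per_sled

for name in SLEDS:
    sleds, nodes = fill_enclosure(name)
    print(f"{name}: {sleds} sleds, {nodes} nodes")
```

The output spans two, four, or eight sleds and two to sixteen nodes, matching the range Dell quotes.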

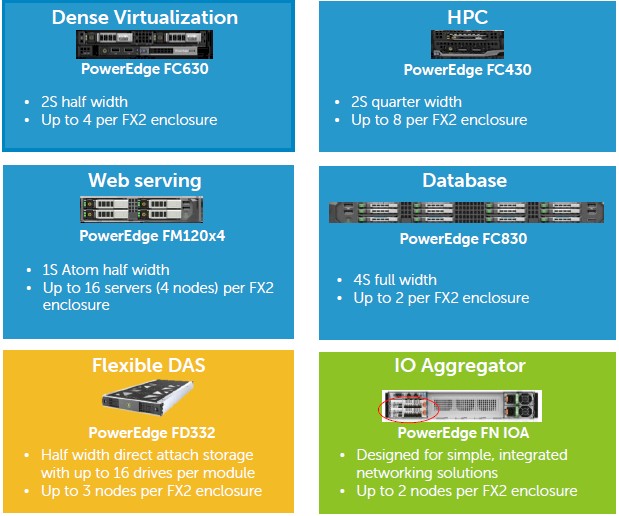

Dell has four different server sleds and a DAS storage module in the initial PowerEdge FX2 configurations, and these will meet the needs of a lot of different workloads. Significantly, Dell is adopting 1.8-inch SSDs as a local storage option in some of the compute nodes, which gives them a certain amount of fast local storage that may, in some cases, obviate the need for the DAS trays altogether. The IO Aggregator, a flexible network I/O module that gets rid of multiple network interfaces, mezzanine cards, and switches in the PowerEdge M series blade chassis, has been converted into a sled as well and can be used to aggregate and virtualize networking between the server nodes in the PowerEdge FX2 chassis and the outside world.

The FX2 chassis comes with 1,100 watt or 1,600 watt power supplies, which can be configured as 1+1 redundant or non-redundant. The enclosure has room for two passthrough modules, which support either 1 Gb/sec or 10 Gb/sec Ethernet. The chassis has eight PCI-Express 3.0 expansion slots, which are shared by the nodes through an integrated PCI-Express switch. (There are two versions of the FX2 enclosure: the plain vanilla FX2 does not have PCI-Express expansion slots, while the FX2s enclosure, with a little "s" added, does.) Eight hot-swappable fans in the back of the enclosure pull air through the system to keep it cool.

The system has a Chassis Management Controller with a graphical user interface that manages all of the components in the system from a single web console and that snaps into Dell's existing OpenManage tools. Each node in the enclosure has its own iDRAC8 "lifecycle controller" rather than sharing one tucked into the back of the chassis. The server nodes plug into a midplane for electric power, networking, and external peripheral connectivity rather than trying to cram all of this onto each node. This way, you can have high-speed peripherals even with very skinny, quarter-width server trays. Compute density is separated from I/O density, which is what everyone means when they say we need to disaggregate the components of the server to improve it.

In December, Dell will start shipping two of the four PowerEdge FX2 compute nodes. The first one is the PowerEdge FC630. This half-width node comes with two of Intel's latest "Haswell" Xeon E5-2600 v3 processors, and Dell will support the high-end 16-core and 18-core variants of these processors in the FC630 nodes but, oddly enough, not the 14-core variants. (You can also have Haswell processors with 4, 6, 8, 10, or 12 cores in addition to those two top-end parts.) The FC630 has 24 memory slots across its two sockets and supports DDR4 memory sticks running at up to 2,133 MT/s, with 4 GB, 8 GB, 16 GB, or 32 GB capacities; that means memory tops out at 768 GB per node. The I/O mezzanine card on the FC630 can link out to two of the PCI-Express x8 expansion slots in the back of the chassis, and the front of the server tray has room for two 2.5-inch drives (SATA or SAS disk drives, or Express Flash NVMe, SATA, or SAS SSDs) or eight 1.8-inch SSDs, which come in a 200 GB capacity.
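The FC630 memory ceiling follows directly from the slot count and the stick sizes. This is a minimal sketch, assuming every populated slot holds an identical stick (memory population rules are not covered here); `fc630_capacity_gb` is a hypothetical helper.

```python
# FC630 memory arithmetic: 24 DDR4 slots across two sockets.
DIMM_SLOTS = 24
DIMM_SIZES_GB = (4, 8, 16, 32)  # stick capacities Dell lists for the FC630

def fc630_capacity_gb(dimm_gb: int, populated: int = DIMM_SLOTS) -> int:
    """Total memory for a node with `populated` identical sticks (an assumption)."""
    if dimm_gb not in DIMM_SIZES_GB:
        raise ValueError(f"unsupported stick size: {dimm_gb} GB")
    if not 0 <= populated <= DIMM_SLOTS:
        raise ValueError(f"populated must be between 0 and {DIMM_SLOTS}")
    return dimm_gb * populated

for size in DIMM_SIZES_GB:
    print(f"{size:>2} GB sticks x {DIMM_SLOTS} = {fc630_capacity_gb(size)} GB")
```

With 32 GB sticks in all 24 slots, the node reaches the 768 GB ceiling.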

The other server tray shipping for the FX2 enclosure is the PowerEdge FM120x4, which is also a half-width sled and carries four of Intel's "Avoton" Atom C2000 series processors. Dell is supporting Avoton variants with two, four, or eight cores (eight being the maximum available), with 1 MB of L2 cache shared by each pair of cores. Each Avoton chip has two dedicated DDR3 memory slots, and each node can have one 2.5-inch drive or two of the new 1.8-inch SSDs allocated to it. The chassis needs only a 1,100 watt power supply to power four of these FM120x4 modules, and the Avoton nodes do not have PCI-Express mezzanine cards but do support 1 Gb/sec and 10 Gb/sec networking out of the chassis through the passthrough modules in the FX2 enclosure.
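Filling an FX2 with four FM120x4 sleds gives the per-enclosure totals below. The sketch uses only the figures above (four sleds, four Avoton chips per sled, two DDR3 slots per chip, 1 MB of L2 per core pair); the helper name `fm120x4_enclosure` is ours.

```python
# Per-enclosure totals for an FX2 filled with four FM120x4 sleds.
SLEDS_PER_ENCLOSURE = 4
NODES_PER_SLED = 4          # one Avoton C2000 chip per node
DIMMS_PER_NODE = 2          # dedicated DDR3 slots per chip
L2_MB_PER_CORE_PAIR = 1

def fm120x4_enclosure(cores_per_chip: int) -> dict:
    """Nodes, cores, DIMM slots, and per-chip L2 for a full enclosure."""
    if cores_per_chip not in (2, 4, 8):
        raise ValueError("Dell offers 2-, 4-, or 8-core Avoton parts")
    nodes = SLEDS_PER_ENCLOSURE * NODES_PER_SLED
    return {
        "nodes": nodes,
        "cores": nodes * cores_per_chip,
        "dimm_slots": nodes * DIMMS_PER_NODE,
        "l2_mb_per_chip": (cores_per_chip // 2) * L2_MB_PER_CORE_PAIR,
    }

print(fm120x4_enclosure(8))
# {'nodes': 16, 'cores': 128, 'dimm_slots': 32, 'l2_mb_per_chip': 4}
```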

Microsoft Windows Server 2008 R2, Windows Server 2012, and Windows Server 2012 R2 are supported on these two nodes, as is the Hyper-V hypervisor. Red Hat Enterprise Linux and SUSE Linux Enterprise Server are also supported; VMware's ESXi hypervisor and the KVM hypervisor are notably absent from the list of supported systems software, but that does not mean they will not work.

Dell is not providing detailed configuration and pricing information for the FX2 machines yet, but says pricing for a sled starts at $1,699. This is not a very useful number, and perhaps pricing information will be divulged when the machines start shipping next month.

The other sleds for the FX2 enclosure will be available sometime during the first half of 2015, and this includes the PowerEdge FC430 and FC830 nodes.

The PowerEdge FC430 is aimed squarely at traditional HPC workloads, where compute density is paramount, as well as at web serving, virtualization, dedicated hosting, and other jobs that require less memory and peripheral expansion. The FC430 is a quarter-width node with two Xeon E5-2600 v3 processors, which means you can fit up to eight server trays into a single 2U FX2 enclosure. Dell is not being precise about the processor configurations yet, but it says it will support Haswell Xeon E5 processors with up to 14 cores and up to six DDR4 memory sticks per node. That works out to 224 cores and 48 memory sticks per FX2 enclosure, and, across 20 enclosures, a total of 4,480 cores and (using relatively cheap 8 GB sticks) 7.5 TB of main memory per rack. For real HPC customers, an InfiniBand networking option would be useful, and presumably one is in the works. The FC430 supports the same 1 Gb/sec and 10 Gb/sec mezzanine cards, linking out to the I/O modules in the chassis or the IO Aggregator.
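Here is the FC430 density arithmetic from the paragraph above, spelled out. Two inputs are inferred rather than stated: six sticks per node, which is what 48 sticks across eight nodes implies, and 20 enclosures (40U) per rack, which is what 4,480 cores divided by 224 cores per enclosure implies. The sketch treats 1 TB as 1,024 GB.

```python
# FC430 density arithmetic; inferred inputs are marked as assumptions.
NODES_PER_ENCLOSURE = 8      # quarter-width FC430 sleds
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 14        # top Haswell part Dell says it will support
DIMMS_PER_NODE = 6           # assumption: implied by 48 sticks per enclosure
ENCLOSURES_PER_RACK = 20     # assumption: implied by 4,480 cores per rack
DIMM_GB = 8                  # "relatively cheap" sticks

cores_per_enclosure = NODES_PER_ENCLOSURE * SOCKETS_PER_NODE * CORES_PER_SOCKET
dimms_per_enclosure = NODES_PER_ENCLOSURE * DIMMS_PER_NODE
cores_per_rack = cores_per_enclosure * ENCLOSURES_PER_RACK
memory_tb_per_rack = dimms_per_enclosure * ENCLOSURES_PER_RACK * DIMM_GB / 1024

print(cores_per_enclosure, dimms_per_enclosure)  # 224 48
print(cores_per_rack, memory_tb_per_rack)        # 4480 7.5
```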

By the way, the FC430 has 50 percent more memory sticks per enclosure (48) than the FM120x4 using the Atom processors (32), and it has a 75 percent higher core density if you compare the eight-core Avoton to the 14-core Haswell (224 versus 128 cores per enclosure). The FC430 also has faster DDR4 memory, higher clock speeds, and fatter sticks. It will also presumably burn a lot more energy, given the relatively low power of the Avoton chip. The important thing is that customers have options.
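The comparison with the FM120x4 can be recomputed from the per-node figures the article gives for each sled: eight two-socket FC430 nodes with 14-core chips, versus sixteen single-chip Avoton nodes with eight cores and two DDR3 slots apiece. This is a back-of-the-envelope sketch; the six-sticks-per-node FC430 figure is inferred from the 48-stick enclosure total, and `per_enclosure` is a hypothetical helper.

```python
# Core and stick counts for a fully populated FX2 of each sled type.
def per_enclosure(nodes: int, chips_per_node: int, cores_per_chip: int,
                  dimms_per_node: int) -> tuple[int, int]:
    """Return (cores, memory sticks) for one enclosure."""
    return nodes * chips_per_node * cores_per_chip, nodes * dimms_per_node

# Eight FC430 nodes; six sticks per node is an assumption (48 / 8).
fc430_cores, fc430_dimms = per_enclosure(8, 2, 14, 6)
# Sixteen Avoton nodes from four FM120x4 sleds.
fm_cores, fm_dimms = per_enclosure(16, 1, 8, 2)

core_ratio = fc430_cores / fm_cores
print(fc430_cores, fm_cores, f"{core_ratio - 1:.0%} more cores")  # 224 128 75% more cores
print(fc430_dimms, fm_dimms)                                      # 48 32
```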

The big bad compute node for the FX2 is the PowerEdge FC830, a full-width node that takes up half of the height of the chassis. (Conceptually, it is like a 1U, four-socket rack server.) Dell is not divulging what processor it uses in the FC830, but it is almost certainly not a Xeon E7; more likely it is an as-yet-unannounced Haswell Xeon E5-4600 v3 chip, presumably due early next year if Intel history is any guide. (January is a good guess for the chip.)

The FC830 is being positioned for high-end database and data store workloads and super-dense virtualization, where the memory footprint is important, and also for certain kinds of back-office systems and even HPC workloads where, again, memory footprint matters as much as or more than compute density. In some cases, the ideal pairing will be a single FC830 server tray with two FD332 direct-attached disk enclosures, which together fill the FX2 enclosure. The FC830 itself can have eight 2.5-inch drives (disk or SSD) or sixteen 1.8-inch drives, on top of the capacity in the two FD332 units. The FD332 is a half-width FX2 tray that holds four rows of 2.5-inch drives in the inside edge bays of the unit, for a total of sixteen drives; both SAS and SATA drives are supported. The Atom-based FM120x4 nodes cannot be linked to the FD332 drive tray, but the three Xeon nodes can be. An FX server can link to multiple FD332 units, and an FD332 unit can be split in two so that its capacity is divvied up across two server trays. The trays are aimed at disk-intensive workloads like Hadoop analytics or virtual SANs.
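The storage arrangement above reduces to simple bay arithmetic. Assumptions, labeled here and in the comments: a split FD332 gives each of its two server trays an equal half of its sixteen drives (the article says only that capacity is divvied up), and `fd332_drives` is a hypothetical helper, not a Dell configuration tool.

```python
# FX2 storage sketch using the article's per-sled figures.
FD332_DRIVES = 16    # 2.5-inch SAS/SATA bays per FD332 tray
FC830_DRIVES = 8     # 2.5-inch bays on the FC830 sled itself

# Which sleds can attach to an FD332, per the article.
CAN_ATTACH_FD332 = {"FC830": True, "FC630": True, "FC430": True, "FM120x4": False}

def fd332_drives(server: str, fd332_units: float) -> int:
    """Drives a server sled gets from FD332 trays.

    Assumption: a split FD332 (fd332_units=0.5) gives each server
    an equal half of its bays.
    """
    if not CAN_ATTACH_FD332[server]:
        raise ValueError(f"{server} cannot be linked to an FD332")
    return int(FD332_DRIVES * fd332_units)

# One FC830 plus two FD332 trays fills the enclosure.
fc830_total = FC830_DRIVES + fd332_drives("FC830", 2)
print(fc830_total)                  # 40
print(fd332_drives("FC630", 0.5))   # 8
```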

While all of this new iron is interesting, what matters is how it is different from using existing machinery. Let's take Hadoop as an example, then database processing, and then web serving.

In the past, Dell would have recommended that customers use its PowerEdge R710 or R720 rack servers, which have two Xeon E5 processors and sixteen 2.5-inch drives, for Hadoop cluster nodes. With these 2U machines, you can get 20 nodes in a rack. Shift to the FX2 setup, which pairs four quarter-width FC430 compute nodes with two FD332 storage nodes in each enclosure, and you can get a total of 80 compute nodes in the rack. In this example, using 14-core Haswell Xeon E5s in both types of machines, the FX2 setup has twice the disk density, at 640 drives per rack, and four times the compute density, at 2,240 cores per rack. And here is the important bit: Dell is promising that customers will pay "zero premium for density."
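The Hadoop rack comparison can be reproduced with a few multiplications. We assume, as the example does, two 14-core Xeon E5s per node in both designs, and we infer 20 FX2 enclosures per rack from the 80-node total at four FC430s per enclosure. `rack_totals` is a hypothetical helper.

```python
# Rack-level comparison for the Hadoop example.
CORES_PER_NODE = 2 * 14   # two 14-core Haswell Xeon E5s per node

def rack_totals(nodes: int, drives: int) -> dict:
    """Summarize one rack's compute nodes, drives, and cores."""
    return {"nodes": nodes, "drives": drives, "cores": nodes * CORES_PER_NODE}

# 2U rack servers: 20 per rack, sixteen 2.5-inch drives each.
rack_servers = rack_totals(nodes=20, drives=20 * 16)

# FX2: assumed 20 enclosures, each with four FC430s and two 16-drive FD332s.
ENCLOSURES = 20
fx2 = rack_totals(nodes=ENCLOSURES * 4, drives=ENCLOSURES * 2 * 16)

print(rack_servers)  # {'nodes': 20, 'drives': 320, 'cores': 560}
print(fx2)           # {'nodes': 80, 'drives': 640, 'cores': 2240}
print(fx2["drives"] / rack_servers["drives"], fx2["cores"] / rack_servers["cores"])  # 2.0 4.0
```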

The database example is an online retailer that has to refresh its database servers to run the new Oracle 12c relational database. The retailer can go with a bunch of PowerEdge R620 rack servers using the Haswell Xeon E5 chips, which can process around 160,000 new orders per minute at a cost of 39 cents per new order per minute. Or it can use the new FC630 server nodes and marry them to Dell's Fluid Cache for SAN caching software and the in-node SSDs. This boosts performance such that an FX2 setup can process 1 million new orders per minute (a factor of 6.3X more work) at 26 cents per new order per minute, a one-third reduction in cost. (Dell is not supplying the underlying configurations for these comparisons, which would have been useful. We have asked to see them.)
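The database figures can be checked the same way. Treating "cost per new order per minute" as a price/performance ratio (total system cost divided by throughput) is our assumption, since Dell has not published the configurations; under that reading, the quoted numbers also imply total system costs. Prices are kept in integer cents to avoid floating-point rounding.

```python
# Price/performance check for the Oracle 12c example (figures from the article).
R620_NOPM, R620_CENTS_PER_NOPM = 160_000, 39
FX2_NOPM, FX2_CENTS_PER_NOPM = 1_000_000, 26

throughput_ratio = FX2_NOPM / R620_NOPM
cost_reduction = 1 - FX2_CENTS_PER_NOPM / R620_CENTS_PER_NOPM

# Assumption: total system cost = throughput x cost per unit of throughput.
r620_spend = R620_NOPM * R620_CENTS_PER_NOPM // 100   # dollars
fx2_spend = FX2_NOPM * FX2_CENTS_PER_NOPM // 100      # dollars

print(f"{throughput_ratio:.2f}x the work, {cost_reduction:.0%} lower cost per NOPM")
print(f"implied system cost: ${r620_spend:,} vs ${fx2_spend:,}")
```

By these figures the per-order cost falls by about a third, while the FX2 setup's implied total outlay is roughly four times larger because it delivers more than six times the throughput.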

For the web serving example, Dell pitted rival Hewlett-Packard's ProLiant DL320p minimalist rack servers at a web hosting company against FX2 machines using the Avoton nodes. The ProLiants were configured with "Sandy Bridge" Xeon E5 processors, 40 to a rack, and refreshing the machines to the Haswell generation would cost $1,060 per node. (This seems pretty cheap to us.) But by moving to FX2 machines with the Avoton processors, the web hoster could cram 320 web servers into a rack instead of 40, and do so for $955 per node. That price difference of $105 per node, about 10 percent, may not seem like a lot, unless you are in the hosting business living on razor-thin margins.
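Finally, the hosting comparison, sized for a fixed fleet of 320 web servers. The per-rack densities and per-node prices are the ones quoted above; the 320-per-rack FX2 figure matches 20 enclosures of 16 Avoton nodes each, which is our inference. `fleet` is a hypothetical helper that returns the racks needed and the total hardware spend.

```python
# Hosting refresh comparison for a fixed number of web servers.
DL320P_PER_RACK, DL320P_PRICE = 40, 1_060
FX2_PER_RACK, FX2_PRICE = 320, 955   # assumed 20 enclosures x 16 Avoton nodes

def fleet(servers: int, per_rack: int, price: int) -> tuple[int, int]:
    """Return (racks needed, total hardware spend in dollars)."""
    racks = -(-servers // per_rack)  # ceiling division
    return racks, servers * price

servers = 320
print(fleet(servers, DL320P_PER_RACK, DL320P_PRICE))  # (8, 339200)
print(fleet(servers, FX2_PER_RACK, FX2_PRICE))        # (1, 305600)
print(f"{(DL320P_PRICE - FX2_PRICE) / DL320P_PRICE:.1%} saved per node")  # 9.9% saved per node
```

For the same 320 servers, the FX2 route needs one rack instead of eight and saves $33,600 in hardware.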