Private Clone S3 Object Storage On A Massive Scale

In the hyperscale world, snippets of data pile up like mountains and a traditional file system that allows data to be updated is not only inappropriate, but too slow to serve up that data. This is precisely why object storage was invented in the first place somewhere between two and three decades ago, depending on how you want to draw the historical lines. Object storage is now a normal part of the IT environment, and being compatible with Amazon Web Services' Simple Storage Service (S3) object storage is a requirement for a lot of enterprise customers.

What enterprise shops would like is the ability to use S3 object storage for the applications they put in the public cloud and also to run S3 object storage locally in their datacenters for the times when the application and the data have to reside on private infrastructure for security, data governance, or response time reasons, or when the cost of storing S3 data locally drops below that of using the actual S3. The Web Object Scaler (WOS) object storage from DataDirect Networks now supports the S3 protocol. And that means companies can get AWS-like functionality on local object storage and, in some cases, save a bundle of dough.
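To make the idea of S3 compatibility concrete, here is a minimal sketch of why an application might not need code changes: with path-style addressing, an object is named by endpoint, bucket, and key, so pointing at a different S3-compatible service is a matter of swapping the endpoint. The local hostname, bucket, and key below are hypothetical, and request signing and authentication are deliberately left out.

```python
from urllib.parse import quote

AWS_ENDPOINT = "https://s3.amazonaws.com"
# Hypothetical address for an on-premises S3-compatible gateway.
LOCAL_ENDPOINT = "https://s3-gateway.example.internal"


def object_url(endpoint: str, bucket: str, key: str) -> str:
    """Build a path-style S3 object URL: <endpoint>/<bucket>/<key>."""
    return f"{endpoint.rstrip('/')}/{quote(bucket)}/{quote(key, safe='/')}"


# The bucket and key stay the same; only the endpoint differs.
bucket, key = "logs", "2014/11/app.log"
print(object_url(AWS_ENDPOINT, bucket, key))
print(object_url(LOCAL_ENDPOINT, bucket, key))
```

In a real application the endpoint would typically live in configuration rather than in code, which is what makes moving data back in house without touching the application plausible.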

"The market is constantly shifting right now with object storage," explains Lance Broell, product marketing manager for the WOS products at DDN. "But right now there are a number of accounts that started with Amazon, built an infrastructure at Amazon, and then found out that the data transfer and the storage costs were more expensive than doing it themselves. If you take one tray's worth of WOS capacity, which is 360 TB, and compare it to Amazon S3 at a reasonable data protection level, you are at $10,000 to $12,000 per month. And that is a boatload of money if you start multiplying that out to large scale. We have customers that started small on Amazon and are expanding, and they have been clamoring for a way to bring the data back in house and have access to it without having to change code."
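The arithmetic behind Broell's figures can be sketched as follows. The 360 TB capacity and the $10,000 to $12,000 monthly range come from the quote; the use of decimal units (1 TB = 1,000 GB) and the ten-tray example are assumptions added for illustration.

```python
TRAY_CAPACITY_TB = 360                # one tray of WOS capacity, per the quote
MONTHLY_COST_USD = (10_000, 12_000)   # quoted S3 cost range for that capacity
GB_PER_TB = 1_000                     # assumption: decimal units


def cost_per_gb_month(monthly_cost_usd: float, capacity_tb: float) -> float:
    """Implied S3 price in dollars per GB per month."""
    return monthly_cost_usd / (capacity_tb * GB_PER_TB)


def annual_cost(monthly_cost_usd: int, trays: int = 1) -> int:
    """Yearly S3 bill for the equivalent of `trays` trays of capacity."""
    return monthly_cost_usd * 12 * trays


low, high = (cost_per_gb_month(c, TRAY_CAPACITY_TB) for c in MONTHLY_COST_USD)
print(f"Implied rate: ${low:.4f} to ${high:.4f} per GB per month")

# Hypothetical scale-up: the equivalent of ten trays (3.6 PB) for a year.
year_low = annual_cost(MONTHLY_COST_USD[0], trays=10)
year_high = annual_cost(MONTHLY_COST_USD[1], trays=10)
print(f"Ten trays for a year: ${year_low:,} to ${year_high:,}")
```

At ten trays the yearly bill works out to between $1.2 million and $1.44 million, which is the kind of multiplication Broell is alluding to.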

The latencies are also a lot lower on a local implementation of S3 running on the WOS gateways compared to the actual S3 service. Depending on where you are located in relation to an Amazon datacenter, what your connectivity is to Amazon, and what your internal networks look like, S3 accesses take on the order of 40 milliseconds to 100 milliseconds on the S3 service, but a local WOS S3 gateway can serve up an object in 10 milliseconds to 20 milliseconds.

Broell tells EnterpriseTech that DDN does not disclose pricing, but adds that WOS object storage, including the WOS software and the disk appliances that it runs on, has about an 18 month to 24 month payback for heavy users of the AWS S3 service. He says WOS arrays are around 30 to 40 percent cheaper than traditional scale-out storage, and that the big reduction in cost comes from WOS object storage taking a lot less management.

A lot of the savings come from the architectural differences between traditional disk arrays and their file systems, which is why the hyperscale datacenter operators embraced the object storage concept when they were running up against the limits of that traditional storage. File-based storage can scale to billions of files and has locking mechanisms and a file system hierarchy that allows for data to be amended, but it is tough to scale and, importantly, total cost of ownership scales up exponentially as the file storage grows. This is unacceptable to hyperscale datacenter operators, who need things to cost less as they scale up. And hence the advent of object storage, which can store trillions of objects in a distributed system. Object storage gives a unique identifier to each object and the data in that object is immutable by necessity and therefore requires no locking mechanisms to prevent it from being altered. There is one pool of storage for all objects and the metadata layer to keep track of where everything is located in the pool is all stored in a single namespace to prevent a bottleneck in scaling.
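The object model described above can be illustrated with a toy in-memory store. This is only a sketch of the general idea (unique identifiers, immutable data, one flat namespace), not the WOS API or its implementation; the class and method names are made up for this example.

```python
import uuid


class ToyObjectStore:
    """In-memory illustration of write-once object storage."""

    def __init__(self) -> None:
        # One flat namespace: object ID -> immutable bytes. No directories.
        self._objects: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        """Store data under a newly generated unique ID and return the ID."""
        object_id = uuid.uuid4().hex
        self._objects[object_id] = bytes(data)  # bytes cannot be modified in place
        return object_id

    def get(self, object_id: str) -> bytes:
        """Fetch an object by its ID."""
        return self._objects[object_id]

    def delete(self, object_id: str) -> None:
        """Remove an object. There is intentionally no update method."""
        del self._objects[object_id]


store = ToyObjectStore()
draft_id = store.put(b"report draft")
final_id = store.put(b"report final")  # a "change" is a new object with a new ID
print(draft_id != final_id, store.get(draft_id))
```

Because nothing is ever modified in place, readers never see a half-written object and no lock is needed to guard against concurrent updates, which is the property the hyperscale operators were after.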

So how far can WOS scale? A WOS 360 object storage cluster can have as many as 256 nodes, with a total of 7,680 disk drives, and can house 1 trillion objects. The WOS object storage has a 32-bit cluster identifier, and that means up to 32 clusters can be lashed together into a single WOS namespace, spanning 8,192 nodes, 245,760 disk drives, and 32 trillion objects. The WOS namespace spans to 1.47 exabytes in capacity at the moment if you assume a 6 TB disk drive. It can do about 8 million object reads and 2 million object writes fully loaded. Object sizes range from 512 bytes to 5 TB.
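The namespace totals follow from multiplying the per-cluster limits by 32 clusters, as this short calculation shows. The per-cluster figures and the 6 TB drive size come from the text; decimal units (1 EB = 1,000,000 TB) are an assumption.

```python
NODES_PER_CLUSTER = 256
DRIVES_PER_CLUSTER = 7_680
OBJECTS_PER_CLUSTER = 10**12      # 1 trillion
MAX_CLUSTERS = 32
DRIVE_CAPACITY_TB = 6
TB_PER_EB = 1_000_000             # assumption: decimal units

drives_per_node = DRIVES_PER_CLUSTER // NODES_PER_CLUSTER
total_nodes = NODES_PER_CLUSTER * MAX_CLUSTERS
total_drives = DRIVES_PER_CLUSTER * MAX_CLUSTERS
total_objects = OBJECTS_PER_CLUSTER * MAX_CLUSTERS
capacity_eb = total_drives * DRIVE_CAPACITY_TB / TB_PER_EB

print(f"Drives per node: {drives_per_node}")
print(f"Namespace nodes: {total_nodes:,}")
print(f"Namespace drives: {total_drives:,}")
print(f"Namespace objects: {total_objects:,}")
print(f"Raw capacity: {capacity_eb:.2f} EB")
```

This works out to 30 drives per node, 8,192 nodes, 245,760 drives, 32 trillion objects, and roughly 1.47 EB of raw capacity, before any overhead for data protection.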

Running the S3 protocol, the WOS S3 gateway is not yet this scalable. With S3, DDN can link between five and ten gateways together and deliver around 800 MB/sec of bandwidth per gateway and more than 500 million objects per gateway, or around 5 billion S3 objects per namespace across ten gateways. According to Broell, DDN will double the WOS S3 object count again in 2015. "And this is not an architectural challenge, but rather a testing challenge," he adds.
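A quick calculation puts the gateway limits next to the native WOS limits. The per-gateway figures come from the text; treating "more than 500 million" as exactly 500 million, and assuming bandwidth adds up linearly across gateways, are simplifications made for this sketch.

```python
OBJECTS_PER_GATEWAY = 500_000_000        # "more than 500 million", used as a floor
BANDWIDTH_PER_GATEWAY_MB_S = 800
NATIVE_NAMESPACE_OBJECTS = 32 * 10**12   # 32 trillion in the native WOS namespace


def s3_namespace(gateways: int) -> dict:
    """Aggregate S3 limits, assuming linear scaling across gateways."""
    return {
        "objects": gateways * OBJECTS_PER_GATEWAY,
        "bandwidth_mb_s": gateways * BANDWIDTH_PER_GATEWAY_MB_S,
    }


for n in (5, 10):
    limits = s3_namespace(n)
    print(f"{n} gateways: {limits['objects']:,} objects, "
          f"{limits['bandwidth_mb_s']:,} MB/sec")

gap = NATIVE_NAMESPACE_OBJECTS // s3_namespace(10)["objects"]
print(f"Native namespace holds {gap:,}x more objects than ten S3 gateways")
```

Ten gateways give about 5 billion objects and 8,000 MB/sec, which leaves the S3 front end several thousand times short of the native object ceiling.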

To give you a sense of scale, it took AWS six years from 2006 through 2012 to hit 1 trillion objects in the actual S3 service, and in less than a year it doubled to 2 trillion objects and a peak of 1.1 million requests per second in the S3 service in April 2013. Amazon has not announced the current size of the object store, but at current growth rates, it should be north of 5 trillion or 6 trillion and maybe as high as 7 trillion or 8 trillion. The interesting thing is that DDN has architected something that can, in theory, contain enough objects to be at least four and maybe five times as scalable as S3. (We have little idea how far the actual Amazon S3 service can scale, or how it is architected and federated.)
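The extrapolation can be reproduced with a simple doubling model. The 2 trillion starting point in April 2013 is from the text; the 18 months elapsed since then and the 10 month and 12 month doubling times are assumptions chosen to illustrate the range, not figures from Amazon.

```python
def projected_objects(base_trillions: float, months_elapsed: float,
                      doubling_months: float) -> float:
    """Exponential growth: the count doubles every `doubling_months`."""
    return base_trillions * 2 ** (months_elapsed / doubling_months)


BASE_TRILLIONS = 2.0          # S3 object count in April 2013, per the text
MONTHS_ELAPSED = 18           # assumption: time since April 2013
WOS_NAMESPACE_TRILLIONS = 32  # theoretical WOS namespace limit

for doubling in (12, 10):     # assumption: "less than a year" to double
    estimate = projected_objects(BASE_TRILLIONS, MONTHS_ELAPSED, doubling)
    print(f"Doubling every {doubling} months: about {estimate:.1f} trillion objects")

for s3_trillions in (7, 8):
    ratio = WOS_NAMESPACE_TRILLIONS / s3_trillions
    print(f"WOS limit vs {s3_trillions} trillion: {ratio:.1f}x")
```

Under these assumptions the estimate lands between roughly 5.7 trillion and 7 trillion objects, and the 32 trillion object WOS limit is four to about four and a half times an S3 store of 7 trillion to 8 trillion objects.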

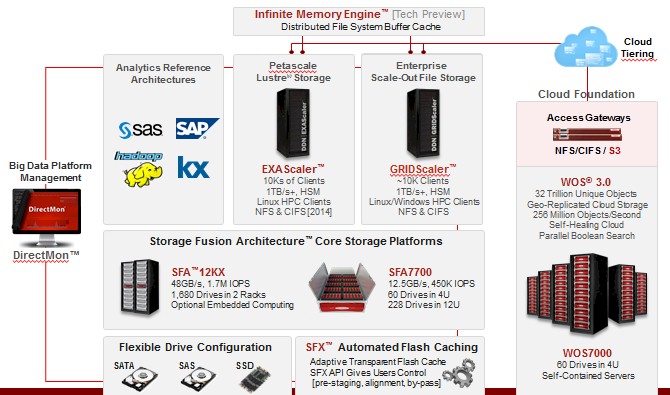

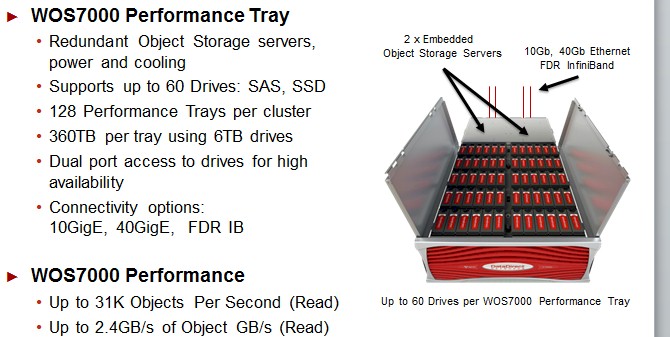

The S3 compatibility is offered through the WOS gateway, which runs on four 1U server nodes in a base configuration. (There is a fifth node that runs the WOS management software tools.) From there, you can scale up WOS storage servers and WOS gateway servers independently of each other, so you can boost S3 object processing capacity or storage capacity separately. DDN sells the WOS7000 appliance, which crams 60 disk drives in a 4U space, as a storage appliance and has multiple sources for the iron behind the gateways and the storage arrays, according to Broell.

The WOS gateway may store objects, but it can serve them up to file systems. The gateway supports the NFS and CIFS interfaces that are popular with Unix, Linux, and Windows systems as well as interfaces that are compatible with IBM's General Parallel File System (GPFS). The gateway can also run an open source plug-in that converts the S3 protocol to the Swift protocol designed for and commonly used with OpenStack. Early next year, DDN plans to bring a native Swift interface to the gateway, which will presumably have higher performance than the open source plug-in for S3.