HP ARMs Moonshot Servers For Datacenters

After what seems like an eternal wait, customers who want to give 64-bit ARM processors a whirl can finally get systems to play with. Hewlett-Packard is showing off Moonshot hyperscale systems using the "Storm" X-Gene1 processors from Applied Micro at the ARM TechCon this week in Santa Clara, and it is also beginning shipments to customers.

HP, the dominant maker of systems in the world, has spent several years prototyping various ARM server processors in its Redstone developmental and Moonshot production hyperscale systems. Starting today, HP is outfitting the Moonshot systems with two different ARM chips that will give X86 processors a run for the money for specific kinds of workloads in the datacenter, particularly jobs where memory and I/O are more important than raw compute. HP is the first of the major server makers to get a production machine in the field based on Applied Micro's X-Gene family of 64-bit ARM server chips, and it is also putting its weight behind a hybrid chip from Texas Instruments that mashes up 32-bit ARM cores and digital signal processors (DSPs).

Applied Micro has been working with a bunch of tier one server makers as well as some upstarts to get them to adopt the X-Gene1 processor, the first in a family of processors based on the ARMv8 specification that the company plans to bring to market. Applied Micro, a maker of networking chips as well as embedded PowerPC processors, has bet a pretty big part of the farm on its expansion into system-on-chip processors aimed at server workloads. The company divulged its ambitious plans back in October 2011, becoming the first full licensee of the ARMv8 architecture from ARM Holdings, the RISC chip designer that controls the intellectual property behind the processors used in so many smartphones, tablets, and other personal computing devices these days.

The ecosystem of hardware and software to support ARM processors in systems has taken longer to develop than many expected and certainly longer than many had wanted. Many potential customers have been waiting for 64-bit ARM chips because their codes have already been created for 64-bit X86, Power, or MIPS architectures, and going back to 32 bits is not in the cards. There are some workloads where 32-bit processing is just fine, particularly when you are just manipulating streaming data of some sort and not doing calculations on it or are not in need of a large memory footprint. Applied Micro had no intention of delivering a 32-bit ARM server chip, but Marvell, Texas Instruments, the defunct Calxeda (which ran out of money developing 32-bit and 40-bit ARM server processors and a sophisticated integrated L2 switching fabric before it could get to 64 bits), and others have 32-bit ARM server SoCs that they contend are still viable.

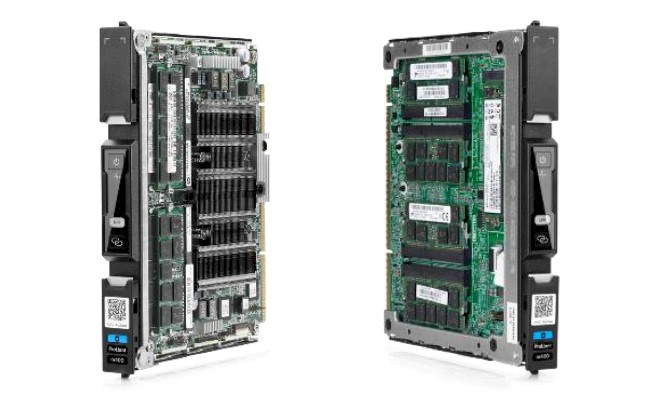

With the debut of the ProLiant m400 X-Gene1 server cartridge for the Moonshot hyperscale machines, both Applied Micro and HP can claim to be on the leading edge, and customers can finally get their hands on systems and put them through their paces to see where they might slide into the datacenter. As EnterpriseTech reported back in August, Applied Micro has two more 64-bit ARM chips in the works. The current X-Gene1 chip has up to eight cores running at 2.4 GHz, four DDR3 memory channels, and two 10 Gb/sec Ethernet NICs embedded on the SoC. With the "Shadowcat" X-Gene2 chip, which is sampling now and expected to ramp next year, Applied Micro will shrink the chip etching processes to 28 nanometers and offer between 8 and 16 cores on a die running at between 2.4 GHz and 2.8 GHz while also adding support for the RDMA over Converged Ethernet (RoCE) protocol. Next year, Applied Micro will start sampling its "Skylark" X-Gene3 chip, which will be made using 16 nanometer FinFET transistors and which will have more cores, a 3 GHz baseline clock speed, and a second generation of RoCE to match the new specification that was announced recently. We bring this up merely to point out that Applied Micro has a relatively aggressive roadmap. The hard bit might be convincing customers to buy the X-Gene1 when X-Gene2 is around the corner sometime next year and will have roughly twice the oomph.

For those customers who want a brawnier ARM SoC and 64-bit memory addressing, the ProLiant m400 cartridge based on the X-Gene1 chip fits the bill. The X-Gene1 chip has four core pairs on it, with each pair sharing a 256 KB L2 cache memory and all eight cores sharing an 8 MB L3 cache. The X-Gene1 cores employ a superscalar, out-of-order execution microarchitecture that is common to high-end server RISC processors. With four memory channels, the X-Gene1 can support up to 64 GB of main memory, which is twice as much as can be attached to a ProLiant m300 Moonshot server cartridge based on the "Avoton" C2000 processor from Intel. This is the obvious Moonshot node to compare the X-Gene1 node to.

The ProLiant m300 cartridge, which started shipping last December, also has eight cores running at 2.4 GHz; in this case they are "Silvermont" Atom cores from Intel. But the Avoton chip only has two memory channels and four memory slots and therefore tops out at 32 GB of DDR3 main memory per SoC using 8 GB sticks. So the X-Gene1 has the memory capacity and bandwidth advantage at the moment, and the network bandwidth advantage as well. The Atom-based m300 cartridge has two Ethernet ports running at 1 Gb/sec or 2.5 Gb/sec, which is fine for some workloads but not for most in the datacenter these days, where 10 Gb/sec is the de facto baseline and for hyperscale customers 25 Gb/sec will quickly become the norm as a link to server nodes. The I/O fabric on the X-Gene1 chip can drive four Ethernet ports running at 10 Gb/sec, even though HP is only using two on its ProLiant m400 cartridge. Specifically, HP is embedding a dual-port ConnectX-3 adapter from Mellanox Technologies onto the m400 server node. The ProLiant m400 cartridge has one X-Gene1 processor per cartridge, just like the m300 has only one Avoton C2000 processor on it.

"What's really nice about the X-Gene part is that the cores scale well with the memory bandwidth that is available," Gerald Kleyn, director of hyperscale server hardware research and development at HP, explains to EnterpriseTech. "As you go look at a lot of the bigger, badder cores, they can consume a lot more memory bandwidth very quickly. So if you have an application that you want to have scale linearly as you add cores, and it is dependent on memory bandwidth, we have seen that do very well."

As a case in point, Intel has moved to 18 cores with the current "Haswell" Xeon E5-2600 v3 processors, which is a 50 percent ramp in core count over the "Ivy Bridge" v2 predecessors. But on the STREAM memory bandwidth benchmark, the performance going from a 12-core Ivy Bridge Xeon to an 18-core Haswell Xeon only increased by 14 percent, and that includes a shift to faster DDR4 memory with the Haswell box compared to slower (and hotter) DDR3 memory with the Ivy Bridge box. Now, STREAM is only a benchmark and is not everything. But the point is that, with linear memory bandwidth scaling, the core count and the memory bandwidth would ideally both have scaled up by 50 percent. There are tradeoffs that any chip maker has to make to boost the core count on a die.
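To make the scaling gap concrete, here is a minimal Python sketch that turns the two percentages quoted above into bandwidth per core. The function name is hypothetical, and the calculation assumes the STREAM gain applies to the whole socket, which the article implies but does not spell out.

```python
def per_core_bandwidth_ratio(core_growth, bandwidth_growth):
    """Ratio of new to old memory bandwidth available per core.

    Both arguments are fractional increases, e.g. 0.50 for 50 percent.
    """
    return (1 + bandwidth_growth) / (1 + core_growth)


# Figures quoted in the article: 12 -> 18 cores (+50 percent),
# STREAM bandwidth up 14 percent.
ratio = per_core_bandwidth_ratio(core_growth=0.50, bandwidth_growth=0.14)
print(f"bandwidth per core: {ratio:.2f}x the previous generation")
```

Under that assumption, each Haswell core gets roughly three quarters of the memory bandwidth that an Ivy Bridge core had, which is the kind of squeeze Kleyn is describing.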

The perfect workload for the ProLiant m400 node is something like Memcached in-memory web application caching, where the workload is both memory bound and I/O bound and is not compute bound, says Kleyn. Any hyperscale datacenter operator that has complete control and intimate knowledge of its software stack is interested in X-Gene1 ARM chips and their successors (and Facebook has been messing around with prototypes for its "Group Hug" microserver since late 2013). In one case, a customer developing applications for ARM-based consumer devices was able to see a 10X reduction in the time it took to test applications by moving from racks of tablets and phones in its test environments to racks of X-Gene1 processors instead. Canonical's Ubuntu Server 14.04 LTS version of Linux is supported on the ProLiant m400 nodes, and so are the Nginx web server and IBM's Informix relational database management system, in addition to the open source Memcached memory caching program. These are all tuned up, and other pieces of system software and applications will no doubt follow as ARM servers ramp.

The "Gemini" Moonshot 1500 enclosure can have up to 45 server cartridges and two integrated switches in its 4.3U of space. The components snap in from the top of the chassis rather than in the front. The backplane in the Moonshot chassis allows for the server cartridges to be linked to each other in a 2D torus topology without requiring an internal switch, and this backplane interconnect is also used to link server cartridges to storage cartridges, which come with disk or flash drives. The interconnect has 7.2 Tb/sec of bandwidth, and the 2D torus is used to link three nodes in a north-south configuration (like an n-tier application) or fifteen nodes in an east-west configuration (like a more traditional parallel cluster or cloud).

The cartridge has its own local storage, based on M.2 flash modules, which holds the local operating system, system and application software, and local data for the node. You link out over Ethernet switches for external storage using iSCSI protocols. The M.2 flash modules come in 120 GB, 240 GB, or 480 GB capacities for the ProLiant m400 node. These are not included in the base price, and neither is the maximum memory capacity.

With eight cores and 64 GB per cartridge using 8 GB sticks, a chassis of the ProLiant m400 nodes will have 360 cores and 2.8 TB of main memory. You put ten of these enclosures in a rack and you have 3,600 cores and 28 TB of memory to hook together to run a distributed application. The m400 node burns about 55 watts with all of its components on the board, so a rack is in the neighborhood of 25 kilowatts across 450 nodes.
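The chassis and rack totals above are straightforward multiplication, and a short Python sketch makes it easy to rerun them for other configurations. The constant and function names are illustrative only; the per-node figures are the ones quoted in the article, and the 55 watt number is approximate.

```python
# Per-node and packaging figures quoted in the article for the ProLiant m400.
CORES_PER_NODE = 8
MEMORY_GB_PER_NODE = 64
WATTS_PER_NODE = 55        # "about 55 watts", so treat results as estimates
NODES_PER_CHASSIS = 45
CHASSIS_PER_RACK = 10


def totals(nodes):
    """Aggregate cores, memory, and power for a given number of nodes."""
    return {
        "nodes": nodes,
        "cores": nodes * CORES_PER_NODE,
        "memory_gb": nodes * MEMORY_GB_PER_NODE,
        "kilowatts": nodes * WATTS_PER_NODE / 1000,
    }


chassis = totals(NODES_PER_CHASSIS)
rack = totals(NODES_PER_CHASSIS * CHASSIS_PER_RACK)
print(chassis)
print(rack)
```

The chassis works out to 2,880 GB of memory, which the article rounds to 2.8 TB, and the rack power comes to a little under 25 kilowatts for the nodes alone. Switches, fans, and power supply losses are not counted in this sketch.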

HP sells the Moonshot machines with a starter kit with 15 server nodes, one switch, the enclosure, and a certain number of power supplies. With the ProLiant m400 setup, the enclosures come with three power supplies and use a 10 Gb/sec switch that HP has been promising to deliver since the first Moonshot systems were announced back in April 2013. With one switch and three power supplies, the ProLiant m400 starter kit for the Moonshot costs $58,477. HP is not providing per-node pricing at this time, but we are looking for more granular pricing data.

The ARM-DSP Hybrid

HP was showing off some ARM-based nodes at the Supercomputing 2013 event in Denver last November, and EnterpriseTech got an exclusive on them at the time. Ed Turkel, group manager of worldwide HPC business development at HP, actually talked a bit about the ProLiant m800 cartridge, which was in preview back then and which is shipping to customers starting this week as well.

The KeyStone-II chips are based on the Cortex-A15 core from ARM Holdings and can have two or four of these cores running at up to 1.4 GHz. The on-chip TeraNet coherency network created by TI links the ARM cores to as many as eight TMS320C66x digital signal processors that are on the same die. The eight DSPs run at up to 1.2 GHz and provide about 1 teraflops at single precision and 384 gigaflops at double precision at that speed. The KeyStone-II SoC also has security and packet processing accelerators and an integrated Ethernet switch.

In the ProLiant m800, HP is gearing down the ARM cores and DSP units. The ARM cores run at 1 GHz and the DSPs also run at 1 GHz. There are four KeyStone-II SoCs on a single cartridge, each with 8 GB of DDR3 main memory running at 1.6 GHz; an optional 32 GB or 64 GB M.2 flash storage module is available per SoC. Floating point performance is not, strictly speaking, the only metric that matters with this ARM-DSP hybrid, although being able to cram something on the order of 1.5 petaflops at single precision and 576 teraflops at double precision floating point into a rack is a pretty big deal. With the KeyStone-II SoC, HP can also cram 720 ARM cores, 1,440 DSP cores, and up to 11.5 TB of flash storage into a single chassis. Multiply by ten for a rack.
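The density figures for the m800 can be reproduced with a few lines of Python. This is a sketch under two assumptions that the article does not state outright: that the chassis is fully populated with 45 cartridges (the Moonshot 1500 maximum given earlier), and that DSP floating point throughput scales linearly when the clock drops from 1.2 GHz to 1 GHz. All names are illustrative.

```python
# Cartridge and SoC figures quoted in the article for the ProLiant m800.
SOCS_PER_CARTRIDGE = 4
ARM_CORES_PER_SOC = 4
DSPS_PER_SOC = 8
MAX_FLASH_GB_PER_SOC = 64
CARTRIDGES_PER_CHASSIS = 45   # assumption: fully populated chassis
CHASSIS_PER_RACK = 10

# Peak DSP throughput per SoC at the rated 1.2 GHz clock, per the article.
RATED_CLOCK_GHZ = 1.2
RATED_SP_GFLOPS = 1000.0
RATED_DP_GFLOPS = 384.0
M800_CLOCK_GHZ = 1.0

socs_per_chassis = SOCS_PER_CARTRIDGE * CARTRIDGES_PER_CHASSIS
arm_cores = socs_per_chassis * ARM_CORES_PER_SOC
dsp_cores = socs_per_chassis * DSPS_PER_SOC
flash_gb = socs_per_chassis * MAX_FLASH_GB_PER_SOC

# Assumption: throughput scales linearly with clock speed.
clock_scale = M800_CLOCK_GHZ / RATED_CLOCK_GHZ
socs_per_rack = socs_per_chassis * CHASSIS_PER_RACK
rack_sp_tflops = socs_per_rack * RATED_SP_GFLOPS * clock_scale / 1000
rack_dp_tflops = socs_per_rack * RATED_DP_GFLOPS * clock_scale / 1000

print(arm_cores, dsp_cores, flash_gb)
print(round(rack_sp_tflops), round(rack_dp_tflops))
```

With those assumptions, the chassis totals and the rack-level 1.5 petaflops and 576 teraflops figures all fall out of the per-SoC numbers, which suggests this is how the rack estimates were derived.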

A base Moonshot system with fifteen ProLiant m800 nodes with 32 GB M.2 flash storage each, a chassis, one switch, and four power supplies costs $81,651. Like the Applied Micro X-Gene1 cartridge, the KeyStone-II cartridge runs Canonical's Ubuntu Server 14.04 LTS variant of Linux.

As we reported in HPCwire last week, the PayPal online payment unit of eBay is using these ProLiant m800 ARM-DSP hybrids for complex event processing workloads. And one of the key benefits is being able to use the Serial RapidIO (SRIO) networking with the integrated 2D torus to get low latency between the nodes to quickly process data flowing into the PayPal System Intelligence application, which correlates information from system logs, social media, and other sources to quickly find out where PayPal's systems are not behaving and therefore affecting application performance and user perception of the services it offers.

The University of Utah is using the ARM-based Moonshot machines in its cloud research, and Sandia National Laboratories is also deploying Moonshots based on ARM. Both organizations are expected to detail their use cases later this week at ARM TechCon. Kleyn was not at liberty to give any details about these installations or indeed any of the other pilots and deployments he said were going on among enterprise and hyperscale customers. Last fall, Turkel said that financial services firms had lined up early to get evaluation units of Moonshot machines, and these are exactly the same customers who will no doubt want to give ARM server chips a whirl. The ProLiant m800s could be suitable for seismic analysis in the energy sector or for all kinds of digital signal processing in the intelligence arena, too.