Hurdles To Enterprise Adoption Of Hyperscale Technology

The recent price war among Amazon, Google, and Microsoft suggests that more of the cost advantages of hyperscale datacenters are being passed on to the customer. So what does this mean for enterprise IT over the coming years?

To date, with the exception of a few SaaS offerings, enterprises have been relatively slow to adopt cloud computing for core workloads. Some think that a fundamental cost advantage in hyperscale infrastructure will drive a massive migration of enterprise workloads. Others are adamant that enterprises will instead adopt elements of hyperscale architecture in their own datacenters to drive costs lower. Will a change in the cost structure really drive widespread adoption?

Over the past decade, hyperscale datacenters have emerged as the fastest-growing component of global IT infrastructure. Much of this capacity was initially built to support entirely new offerings in search and social media. The large scale and low margins of these products required organizations to fundamentally change how they think about IT infrastructure. The entire infrastructure was abstracted into software running on low-cost x86 servers and standard storage drives, and these vendors developed much of the software for managing the infrastructure and delivering services internally. Large-scale datacenters were often located near low-cost power sources and/or designed to use minimal air conditioning. The resulting infrastructure and applications look fundamentally different from those in traditional enterprise datacenters or colocation facilities, and they usually deliver infrastructure at a much lower cost.

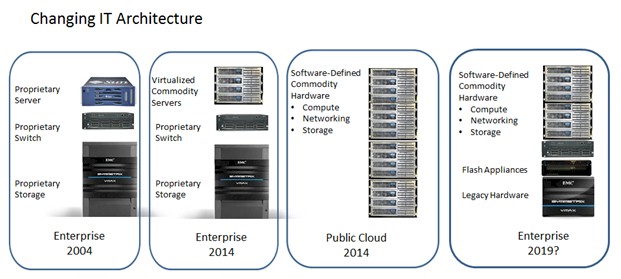

Enterprise datacenter architecture has been evolving more slowly. The biggest change for the enterprise has been at the server layer, where virtualization has enabled the abstraction of server functionality into software. This has provided dramatic improvements in economics by increasing hardware utilization, reducing provisioning time, and improving flexibility. However, enterprise storage is still largely characterized by proprietary hardware islands managed by dedicated storage admins.

The central question over the next several years is whether enterprise datacenters will move to fully software-defined architectures (and hence replicate some of the cost advantage of the hyperscale public cloud), or whether much more of the enterprise IT workload will migrate onto public cloud infrastructure. The answer is most likely "yes" to both, but let's look at some of the other factors that will help determine the relative success of each option.

Barriers To Hyperscale Adoption

Despite the success of several SaaS categories and a large community of cloud-focused startups, overall enterprise migration onto public cloud platforms has been proceeding at a relatively slow pace. A survey of cloud adoption by Bain & Company found that a mere 11 percent of companies were early adopters rapidly moving workloads into the cloud. More recently, a 2014 survey by RightScale suggested that only 18 percent of enterprises were "cloud focused," while more than half were still in the evaluation and planning stages.

Key barriers to more rapid adoption include:

- Risk management: Security, compliance and governance

Studies suggest that risk management concerns decrease sharply as organizations gain experience with cloud platforms. Cloud providers have added identity and access control features to help ease those fears; however, some risks are inherent in cloud platforms. The recent decision by the federal district court in Manhattan that Microsoft must hand over a customer's email stored in datacenters on foreign soil is just one example.

- Technical limitations: Scalability and performance of compute and storage resources

Technical limitations are being whittled away as well, but cloud datacenters are still much better at delivering large, low-cost object storage than high-performance block storage. Most cloud datacenters were designed for partitioned workloads and may not be the best choice for scale-up, high-availability, mission-critical workloads. And, quite simply, no cloud can defeat the laws of physics: workloads requiring high-bandwidth, low-latency data access will always be better served when the resources are close to the user.

- Organizational barriers: Migration to the cloud requires new skill sets

Organizational barriers need to be addressed whenever there is a significant change in architecture and process, and the cloud transition is no exception. Over the past two decades, many organizations have built "horizontal" IT organizations, with specialist admins for applications, networking, and storage. However, both cloud and on-premise "converged" architectures require much more "vertical" organizations, because the entire stack is managed as a single resource focused on supporting the application. This should reduce net staffing requirements, but it also demands entirely new skill sets. Leading an organization effectively through this kind of disruption is not a simple task.

Will Hyperscale Cloud Data Centers Prevail?

As enterprises overcome obstacles to hyperscale migration, they will benefit from faster scalability, geographic reach, cost savings and business continuity. But how compelling are these advantages, and how sustainable are they?

As is typically the case, it will depend on the customer segment and use case. For example, scalability and geographic reach will be much more important to a small SaaS company looking to expand rapidly than to a long-established company that is not changing its geographic footprint. Similarly, concerns around cloud security, privacy, and latency will be greater for a financial services provider than for a food processor.

Two primary things to consider:

- Economics: Economics often drive market share over time, so two key questions are how much of the hyperscale cost advantage will be passed on to customers, and how much software-defined datacenter architecture can close the gap between hyperscale and enterprise datacenters. Hyperscale datacenters achieve economies of scale through high-volume purchasing that may be hard to reproduce. However, software-defined networking and storage can significantly improve the utilization and flexibility of on-premise resources and allow users to move away from dependence on high-margin, proprietary appliances toward industry-standard hardware. Initiatives like the Open Compute Project should encourage this, and as software-defined storage tools become more widely adopted, eliminating today's siloed storage environments will enable much more efficient management of enterprise IT resources.

- Energy: As a significant datacenter operating cost, energy is another key concern. Enterprise datacenter facilities may never match the advantage gained by locating hyperscale datacenters next to remote hydroelectric plants; however, new business models are evolving that may substantially reduce energy-related capital and operating costs. For example, Johnson Controls is building a business managing critical infrastructure, making it easier to bring best practices even to smaller datacenters. Electric utilities are beginning to look at datacenters co-located at power plants, which take advantage of the inherent availability of base-load generation, eliminate transmission costs, and could potentially integrate geo-distributed IT workloads into the high-value demand response systems used to manage the electrical grid.

Software-defined datacenter platforms increasingly provide the capability to span workloads across geographic locations while using common management tools in all locations. This facilitates hybrid cloud/on-premise deployments that more closely match the needs of each workload to the capabilities of the available platforms. A more fluid architecture with unified management will give the IT team the ability to move workloads among resources based on requirements such as privacy, low latency, capacity for one-time analytic tasks, high-bandwidth storage, or low-cost storage. Metrics around utilization, performance, and marginal capacity costs will have to inform these decisions.
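To make the placement idea concrete, here is a minimal sketch of a requirements-driven placement rule: filter platforms by hard constraints (privacy, latency, free capacity), then pick the one with the lowest marginal cost. The `Platform` and `Workload` types, the platform names, and every number below are hypothetical illustrations, not data from any real provider or product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Platform:
    name: str
    on_premise: bool
    latency_ms: float          # hypothetical typical access latency for users
    cost_per_tb_month: float   # hypothetical marginal capacity cost
    free_capacity_tb: float


@dataclass(frozen=True)
class Workload:
    name: str
    requires_on_premise: bool  # e.g. a privacy or compliance constraint
    max_latency_ms: float
    capacity_tb: float


def place(workload: Workload, platforms: list[Platform]) -> Optional[Platform]:
    """Return the cheapest platform meeting the workload's hard constraints, or None."""
    eligible = [
        p for p in platforms
        if (p.on_premise or not workload.requires_on_premise)
        and p.latency_ms <= workload.max_latency_ms
        and p.free_capacity_tb >= workload.capacity_tb
    ]
    if not eligible:
        return None
    # Among eligible platforms, minimize the marginal monthly cost of this workload.
    return min(eligible, key=lambda p: p.cost_per_tb_month * workload.capacity_tb)


# Hypothetical pool: a software-defined on-premise cluster and a public cloud object store.
platforms = [
    Platform("on-prem-sds", True, 1.0, 30.0, 200.0),
    Platform("public-cloud-object", False, 40.0, 10.0, 10_000.0),
]

if __name__ == "__main__":
    for w in [
        Workload("trading-db", True, 5.0, 20.0),
        Workload("archive", False, 200.0, 500.0),
    ]:
        chosen = place(w, platforms)
        print(w.name, "->", chosen.name if chosen else "no eligible platform")
```

With these made-up figures, a latency-sensitive regulated database stays on premise, while a large archive goes to the cheaper public cloud tier. A real policy would weigh many more metrics, but the split between hard constraints and a cost objective is the essence of the decision.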

The fluid architecture enabled by software-defined datacenters and hybrid cloud/on-premise infrastructure also redefines disaster recovery (DR) architecture. Surprisingly, the majority of enterprises either have no DR strategy or process, or do not test their DR process regularly. Traditional active-passive DR architectures use IT resources inefficiently and introduce inherent risk, because you only know whether failover will work right after it has been tested or at the moment it is actually needed. Emerging geo-distributed or hybrid deployments enabled by software-defined, active-active platforms, by contrast, use resources efficiently and eliminate the failover concept entirely, and with it the question of whether failover will work. In many cases, a large public cloud may represent an ideal platform for DR.
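The efficiency argument can be illustrated with simple capacity arithmetic. This sketch assumes a design goal of surviving the loss of any single site at peak load. Active-passive provisions a full idle copy of capacity. Active-active across N sites only needs each site sized to absorb its share when one site is down. The function names are illustrative, not taken from any product.

```python
def utilization_active_passive(peak_load: float) -> float:
    """Fraction of provisioned capacity doing useful work at peak (primary + idle standby)."""
    provisioned = 2 * peak_load  # primary and standby are each sized for the full load
    return peak_load / provisioned


def per_site_capacity_active_active(peak_load: float, sites: int) -> float:
    """Capacity each site needs so the surviving sites can carry peak load if one site fails."""
    if sites < 2:
        raise ValueError("active-active DR needs at least two sites")
    return peak_load / (sites - 1)


def utilization_active_active(peak_load: float, sites: int) -> float:
    """Fraction of provisioned capacity in use at peak when all sites are healthy."""
    provisioned = sites * per_site_capacity_active_active(peak_load, sites)
    return peak_load / provisioned  # simplifies to (sites - 1) / sites


if __name__ == "__main__":
    print("active-passive:", utilization_active_passive(100.0))
    for n in (2, 3, 4):
        print(f"active-active, {n} sites:", round(utilization_active_active(100.0, n), 3))
```

Under these assumptions, two-site active-active provisions the same total capacity as active-passive (50 percent utilization), but both sites serve live traffic, so the "standby" is exercised continuously. With three sites, utilization rises to about two-thirds, and with four to 75 percent. This is where geo-distributed designs gain efficiency.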

The Enterprise Evolution

Even though hyperscale public datacenters have compelling advantages today, enterprises have been slow to adopt these platforms broadly because of risks around security and privacy, technical or performance limitations, and internal organizational structures and incentives. Many of these obstacles are being reduced or eliminated as cloud providers add features and enterprises build cloud-focused skills and processes into their organizations, so enterprise deployments on hyperscale public clouds will continue to increase over the next few years.

However, it is unlikely that enterprises will become completely dependent on public clouds. The analogy to power generation, which moved from distributed, local production to more centralized generation by specialized utilities a century ago, is not going to play out to the same extent. Instead, hyperscale datacenters will continue to grow and certain segments of enterprise workloads will find a home there. Meanwhile, distributed enterprise and colocation datacenters will start to resemble hyperscale datacenters from an architectural perspective. Software-defined datacenter tools will give enterprise cloud customers the freedom to match workloads with internal and public platforms and limit the likelihood of being confined to yet another proprietary silo.

Bill Stevenson is executive chairman and an early investor in Sanbolic and has been working actively with the company since 2002. Bill started his career at Bain & Company, and then helped found and build Corporate Decisions (CDI), a growth strategy firm serving Fortune 1000 companies and private equity firms. Major clients included companies in the electronics, aerospace, financial services, and retail industries. CDI was sold to Marsh & McLennan in 1997, where Bill led the Business Design Innovation consulting team for three years and taught executive education classes at the Wharton School. He has a degree in economics from Princeton University, and did graduate work at the University of Cologne, Germany, with a Fulbright grant.