IEEE Gets Behind 25G Ethernet Effort

After several days of presentations and a unanimous vote of 61 to zero in favor, the members of the IEEE have decided to back an effort to develop 25 Gb/sec Ethernet standards for servers and switching. The formation of the study group is the first step in a process that will almost certainly culminate in a new and more affordable set of networking options suitable for clusters supporting clouds and distributed workloads alike.

Impatient with the downvote on the formation of the 25G study group at an IEEE meeting in China back in March, two cloud computing giants – Google and Microsoft – teamed up with two switch chip providers – Broadcom and Mellanox Technologies – and one upstart switch maker – Arista Networks – to create a specification for 25 Gb/sec Ethernet speeds that were different from those officially sanctioned by the IEEE but based on existing 100 Gb/sec standards. The five companies set up the 25 Gigabit Ethernet Consortium in early July and very clearly wanted to put some pressure on the IEEE to see the reasoning behind the effort and to quickly get behind it.

This is precisely what has happened. Mark Nowell, senior director of engineering at Cisco Systems, led the call for interest for 25G, and now that it has been approved by the IEEE at a much more broadly attended meeting in San Diego last week, Nowell will be the study group chair. (You can see the call for interest at the IEEE at this link, and can see the proposal that Nowell made along with executives from Microsoft, Intel, Dell, and Broadcom to make the case for 25 Gb/sec switching at this link.)

The news of the formation of the IEEE study group comes just as network market watcher Dell'Oro Group has rejiggered its forecasts to show that by 2018 it expects 25 Gb/sec to be the second most popular port speed after 10 Gb/sec; the 10 Gb/sec ports will account for more than half of the port count and revenues at that time. This, among other things, reflects the divergent networking needs of enterprises, supercomputing centers, and hyperscale datacenter operators.

Sameh Boujelbene, director at Dell'Oro Group, explained it this way: “25 Gb/sec drives a better cost curve than 40 Gb/sec as most components that support 100 Gb/sec can be easily split to 25 Gb/sec. Material Capex and Opex savings can also be achieved, especially by mega cloud providers as 25 Gb/sec cabling infrastructure can likely support many generations of servers and network equipment. We believe that two of the large cloud providers, such as Google and Microsoft alone, could drive a 25 Gb/sec ecosystem.”

This is exactly what Anshul Sadana, senior vice president of customer engineering at Arista Networks, explained to EnterpriseTech when the 25G consortium was launched three weeks ago. An ASIC doing 40 Gb/sec Ethernet has four lanes running at 10 Gb/sec, and that makes for higher heat and limited port density compared to a switch that has ports running at 25 Gb/sec, with a single lane coming out of each port. Just like 40 Gb/sec switches ganged up four 10 Gb/sec lanes to make a port, current 100 Gb/sec switches make a port by ganging up four 25 Gb/sec lanes. Compared to a 40 Gb/sec switch, Sadana estimated that moving to single-lane 25 Gb/sec links would allow the switch to draw somewhere between one half and one quarter of the power while delivering somewhere between 2X and 4X the port density. Such a 25G Ethernet switch might provide 2.5X the bandwidth of a 10 Gb/sec switch at 1.5X the cost with half the power draw and a higher port density.
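To put those rough multipliers on a per-bit footing, here is a minimal Python sketch. It takes the 2.5X bandwidth, 1.5X cost, and 0.5X power figures quoted above at face value, normalizes everything to a 10 Gb/sec switch, and treats cost and power as scaling evenly across bandwidth, which is a simplifying assumption. The function and constant names are hypothetical.

```python
# Multipliers for a 25G switch relative to a 10 Gb/sec switch, as quoted above.
BANDWIDTH_X = 2.5   # 2.5X the bandwidth
COST_X = 1.5        # 1.5X the cost
POWER_X = 0.5       # half the power draw


def per_unit_bandwidth(relative_value: float, relative_bandwidth: float) -> float:
    """Return a relative cost or power figure per unit of bandwidth.

    A result below 1.0 means the 25G switch is cheaper (or cooler) per
    Gb/sec than the 10 Gb/sec baseline.
    """
    return relative_value / relative_bandwidth


cost_per_gbps = per_unit_bandwidth(COST_X, BANDWIDTH_X)
power_per_gbps = per_unit_bandwidth(POWER_X, BANDWIDTH_X)

if __name__ == "__main__":
    print(f"Relative cost per Gb/sec:  {cost_per_gbps:.2f}")
    print(f"Relative power per Gb/sec: {power_per_gbps:.2f}")
```

On those assumptions, the 25G switch would come in at roughly 60 percent of the cost and 20 percent of the power per unit of bandwidth compared to the 10 Gb/sec baseline.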

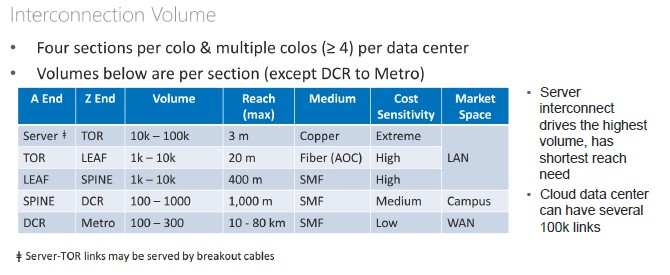

To make the case for 25G Ethernet, Nowell relied on some figures supplied by Microsoft for its internal network, which were actually used to make the case for 400 Gb/sec Ethernet according to Nowell but which also make the case for 25 Gb/sec switching. Take a look:

The table above shows the scale of a Microsoft datacenter in each section. There are usually four sections per co-location facility and usually four or more co-locations per datacenter. So multiply these numbers by 16X to get a sense of the scale of a datacenter operated by Microsoft. As you can see, a datacenter can have several hundred thousand links between servers and switches and the various tiers in the network, and importantly, there is what Microsoft calls "extreme" price sensitivity at the top-of-rack switch layer. In many cases, 10 Gb/sec speeds are not enough bandwidth coming out of a box and 40 Gb/sec switches cost too much, run too hot, and don't have enough ports to service a full rack. The 25 Gb/sec speed maximizes both usable ports (128 for a single ASIC) and bandwidth (3.2 Tb/sec). An ASIC for a 40 Gb/sec switch that has four lanes running at 10 Gb/sec to make those ports can only have 32 usable ports with a total bandwidth of 1.28 Tb/sec. If you boost the lane speed to 20 Gb/sec and do two lanes per port, that allows you to double up to 64 ports running at 40 Gb/sec, but that still delivers only 2.56 Tb/sec of aggregate bandwidth. The proposed 25 Gb/sec speed yields the bandwidth of a 100 Gb/sec switch and the port count of a 10 Gb/sec switch.
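The port and bandwidth arithmetic can be checked with a short Python sketch. It assumes the switch ASIC exposes 128 lanes, a figure inferred from the 128 single-lane ports cited for the 25 Gb/sec case and not stated outright in the presentations. The class and function names are hypothetical.

```python
from dataclasses import dataclass

# Assumption: 128 lanes per ASIC, inferred from 128 single-lane 25 Gb/sec ports.
ASIC_LANES = 128


@dataclass(frozen=True)
class PortConfig:
    name: str
    lane_gbps: int        # speed of each lane in Gb/sec
    lanes_per_port: int   # lanes ganged together to form one port

    @property
    def port_gbps(self) -> int:
        return self.lane_gbps * self.lanes_per_port

    @property
    def usable_ports(self) -> int:
        return ASIC_LANES // self.lanes_per_port

    @property
    def aggregate_tbps(self) -> float:
        return self.usable_ports * self.port_gbps / 1000


CONFIGS = [
    PortConfig("40G (4 x 10G lanes)", lane_gbps=10, lanes_per_port=4),
    PortConfig("40G (2 x 20G lanes)", lane_gbps=20, lanes_per_port=2),
    PortConfig("25G (1 x 25G lane)", lane_gbps=25, lanes_per_port=1),
]


def summarize(configs=CONFIGS):
    """Return (name, usable ports, aggregate Tb/sec) for each configuration."""
    return [(c.name, c.usable_ports, c.aggregate_tbps) for c in configs]


if __name__ == "__main__":
    for name, ports, tbps in summarize():
        print(f"{name}: {ports} ports, {tbps:.2f} Tb/sec")
```

Under that lane-count assumption, the sketch reproduces the 32, 64, and 128 port counts and the 1.28, 2.56, and 3.2 Tb/sec aggregate figures cited above.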

Here's the case that Dell made for the 25 Gb/sec option, showing what it would take to wire up 100,000 servers in a datacenter using 40 Gb/sec and 25 Gb/sec Ethernet switching:

100K SERVERS

There are a number of things to note in the chart above. The first is that server densities are higher than 40 machines per rack these days, and you can often double or triple that depending on the hyperscale machine design. So being able to drive up the port count on the switch is important. In many cases, 10 Gb/sec ports are not fast enough because the workloads running on each node can saturate a 10 Gb/sec port – this is particularly true of machines with lots of cores and memory running server virtualization hypervisors and workloads that eat a lot of network bandwidth.

In the scenario above, a 40 Gb/sec switch using 10 Gb/sec lanes can only support 28 servers with four 100 Gb/sec uplinks, and it is only using 47.5 percent of the 3.2 Tb/sec of available bandwidth on the switch ASIC. (There is a reasonable and fairly apples-to-apples oversubscription ratio on all of these scenarios.) If you switch to 20 Gb/sec lanes, you can boost the downlink port count to 48 and double the 100 Gb/sec uplink ports to eight, and drive the ASIC bandwidth utilization rate up to 85 percent. But look at that 25 Gb/sec switch. You can get 96 downlink ports with eight 100 Gb/sec uplinks and fully utilize the 3.2 Tb/sec of bandwidth in the switch. The upshot is that it takes only 1,042 top-of-rack switches to wire up those 100,000 servers instead of 3,572 switches with the 40 Gb/sec devices.
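The utilization and switch-count figures follow from simple arithmetic, sketched below in Python. The sketch assumes one downlink port per server and that every top-of-rack switch is filled to its downlink count; neither assumption is spelled out in the presentation, and the names used are hypothetical. The oversubscription ratio is computed here as downlink bandwidth divided by uplink bandwidth.

```python
import math

ASIC_GBPS = 3200      # 3.2 Tb/sec of switching bandwidth per ASIC
UPLINK_GBPS = 100     # every scenario uses 100 Gb/sec uplinks
SERVERS = 100_000     # servers to be wired up

# scenario name -> (downlink ports, downlink speed in Gb/sec, uplink ports)
SCENARIOS = {
    "40G ports, 10G lanes": (28, 40, 4),
    "40G ports, 20G lanes": (48, 40, 8),
    "25G ports, 25G lanes": (96, 25, 8),
}


def analyze(downlinks: int, downlink_gbps: int, uplinks: int) -> dict:
    """Compute ASIC utilization, oversubscription, and switches needed."""
    down_bw = downlinks * downlink_gbps
    up_bw = uplinks * UPLINK_GBPS
    return {
        "utilization_pct": 100 * (down_bw + up_bw) / ASIC_GBPS,
        "oversubscription": down_bw / up_bw,
        # Assumption: one downlink per server, switches fully populated.
        "switches": math.ceil(SERVERS / downlinks),
    }


if __name__ == "__main__":
    for name, params in SCENARIOS.items():
        r = analyze(*params)
        print(
            f"{name}: {r['utilization_pct']:.1f}% of ASIC bandwidth, "
            f"{r['oversubscription']:.1f}:1 oversubscription, "
            f"{r['switches']:,} switches"
        )
```

With these inputs the sketch gives 47.5, 85, and 100 percent utilization, and 3,572 and 1,042 switches for the first and last scenarios, which match the figures above. The switch count it produces for the 20 Gb/sec lane case is derived from the same assumptions and does not come from the chart.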

The same logic that applies to cloud builders and hyperscale datacenter operators will apply to many supercomputing centers. If they need more bandwidth than 10 Gb/sec but don't need to move to 40 Gb/sec yet on the server side, then 25 Gb/sec networking will allow for higher network density which in turn will enable higher server density at the rack level. The wonder, having seen this math, is why 25 Gb/sec was not already an option once 100 Gb/sec technology had matured. But these things take time.

In fact, Nowell expects it to take around 18 months for a completed 25G standard. Given that much of the technology for 25G is being recycled from 100G, Nowell says the IEEE will be able to get to a baseline technical specification fairly quickly, but that the review of the specification will take time. "With the level of consensus that we have, we are going to be able to move this very quickly through the IEEE process," says Nowell.

This will no doubt not be fast enough for many hyperscale datacenter operators and cloud builders, and the race is on to see if Broadcom, Mellanox Technologies, or Intel will be the first to market with an ASIC supporting 25G Ethernet. Anyone who can do it in less than 18 months is sure to get some big business, and the pressure is on because it takes at least six months to design an ASIC and test it under the very best of circumstances. In this case, working backwards from an ASIC for 100 Gb/sec Ethernet is the very best of circumstances, so it will be fun to watch this move along fast.