Oracle Boosts Exadata Database Clusters With Custom Xeon E7s

For some workloads, a big eight-socket server node with a hefty memory footprint will support a database better than four two-socket nodes in a database cluster, which is why Oracle offers two different flavors of its Exadata database machine. The Exadata clusters were updated with Intel's Xeon E5 v2 processors last November, and now they are getting the option of larger nodes based on Intel's Xeon E7 chips.

The new machine is called the Exadata X4-8, and by moving to the Xeon E7 v2 processors, the database nodes in the Exadata machine have a lot more cores and main memory that can be deployed to support a single database instance. The prior machines were based on the ten-core "Westmere-EX" Xeon E7 v1 processors, which topped out at 2 TB of main memory per eight-socket node. With the new "Ivy Bridge-EX" Xeon E7 v2 processors, Intel has cranked up the memory ceiling to 6 TB for a four-socket node and 12 TB for an eight-socket node using 64 GB DDR3 memory sticks. (Remember, Intel skipped the "Sandy Bridge" generation to get the Xeon family more in sync.) Oracle tops out each X4-8 node at 6 TB using 32 GB sticks. It could double that to 12 TB with 64 GB sticks if customers needed it, but as it is, this node offers three times the memory of its predecessor without resorting to that very expensive 64 GB memory.
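The memory ceilings in that paragraph come from simple multiplication: sockets × DIMM slots per socket × stick size. The sketch below is a minimal illustration, assuming 24 DIMM slots per socket, a value inferred from the article's own numbers (12 TB ÷ 8 sockets ÷ 64 GB) rather than quoted from an Intel or Oracle spec sheet; `node_memory_tb` is a hypothetical helper.

```python
# Sketch: node memory capacity = sockets x DIMM slots per socket x stick size.
# ASSUMPTION: 24 DIMM slots per socket, inferred from the article's figures
# (12 TB / 8 sockets / 64 GB = 24), not taken from a vendor spec sheet.
DIMMS_PER_SOCKET = 24
GB_PER_TB = 1024


def node_memory_tb(sockets: int, stick_gb: int,
                   dimms_per_socket: int = DIMMS_PER_SOCKET) -> float:
    """Return the maximum main memory of one node, in TB."""
    return sockets * dimms_per_socket * stick_gb / GB_PER_TB


if __name__ == "__main__":
    print(node_memory_tb(4, 64))  # four-socket node, 64 GB sticks -> 6.0
    print(node_memory_tb(8, 64))  # eight-socket node, 64 GB sticks -> 12.0
    print(node_memory_tb(8, 32))  # eight-socket node, 32 GB sticks -> 6.0
```

Dividing the 32 GB result by the prior generation's 2 TB gives the 3X figure, and the 64 GB result gives 6X.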

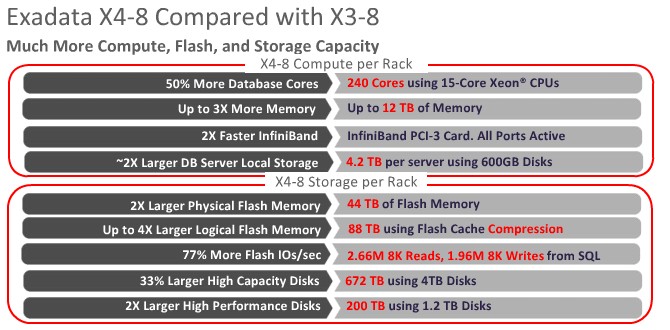

The Exadata X4-8 is based on the fifteen-core Xeon E7-8895 v2, which has a 2.8 GHz clock speed and 37.5 MB of L3 cache memory shared across the cores. The interesting bit about this chip is that Oracle worked with Intel to get a customized version of the Xeon E7 that lets the Oracle database scale the number of cores and the clock frequency up to maximize performance in the node, and back down to conserve energy. The core count in each of the two nodes in the X4-8 rack is now 120 (eight sockets times 15 cores) versus 80 with the prior generation. That is 50 percent more compute, more or less, plus 3X the memory, and 6X if customers can convince Oracle to go with fatter sticks in an emergency.
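The generation-over-generation ratios in that paragraph can be checked by encoding both eight-socket node configurations and dividing. This is a hypothetical sketch: the `NodeConfig` class is an illustrative name, and the 6 TB and 12 TB X4-8 memory figures are simply the article's 3X and 6X multiples of the X3-8's 2 TB, not values read from an Oracle spec sheet or API.

```python
# Sketch: compare one X4-8 database node against its X3-8 predecessor,
# using only figures quoted in the article.
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeConfig:
    name: str
    sockets: int
    cores_per_socket: int
    memory_tb: float  # maximum main memory per node, in TB

    @property
    def cores(self) -> int:
        return self.sockets * self.cores_per_socket


X3_8 = NodeConfig("X3-8 (Xeon E7 v1)", sockets=8, cores_per_socket=10, memory_tb=2.0)
X4_8 = NodeConfig("X4-8 (Xeon E7-8895 v2)", sockets=8, cores_per_socket=15, memory_tb=6.0)
# Hypothetical: the same node populated with 64 GB sticks (12 TB).
X4_8_FAT = NodeConfig("X4-8, 64 GB sticks", sockets=8, cores_per_socket=15, memory_tb=12.0)


def ratio(new: NodeConfig, old: NodeConfig) -> tuple[float, float]:
    """Return (compute ratio, memory ratio) of new versus old."""
    return new.cores / old.cores, new.memory_tb / old.memory_tb


if __name__ == "__main__":
    print(X4_8.cores, X3_8.cores)  # 120 80
    print(ratio(X4_8, X3_8))       # (1.5, 3.0)
    print(ratio(X4_8_FAT, X3_8))   # (1.5, 6.0)
```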

The X4-8 database node comes in a 5U rack form factor and now has seven 600 GB, 10K RPM SAS disks for storing local applications and database files. The disk controller that sits behind these disks has a 512 MB battery-backed write cache, and it has been tweaked so the battery can be replaced while the machine is running without losing data in the cache.

Tim Shetler, vice president of product management at Oracle, tells EnterpriseTech that the new large-node Exadata machine has a few other tweaks. The eight ports of 40 Gb/sec InfiniBand coming off the server are now all active, and all of them hang off PCI-Express 3.0 slots, so there is plenty of bandwidth coming out of each node. Shetler says there was plenty of bandwidth already, but since the capacity is there and the node can drive it, Oracle figured it might as well turn it all on. The InfiniBand network in the Exadata machine links the two database nodes to each other and to the Exadata storage servers, which store data in the hybrid columnar format that Oracle created several years ago to boost performance and cut the storage capacity that databases consume. The node also has four dual-port 10 Gb/sec Ethernet controllers for linking the Exadata machine to the outside world, plus ten ports running at 1 Gb/sec.
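The port counts in that paragraph translate into nominal aggregate bandwidth per database node. This is a back-of-the-envelope sketch that just multiplies ports by line rate; the `PORTS` table and `aggregate_gbps` helper are illustrative names, and real usable throughput will be lower once link encoding and protocol overhead are counted.

```python
# Sketch: nominal aggregate network bandwidth of one X4-8 database node.
# These are line rates only; usable throughput is lower in practice.
PORTS: dict[str, tuple[int, int]] = {
    # link type: (number of ports, Gb/sec per port)
    "InfiniBand": (8, 40),
    "10 GbE": (4 * 2, 10),  # four dual-port controllers
    "1 GbE": (10, 1),
}


def aggregate_gbps(ports: dict[str, tuple[int, int]]) -> dict[str, int]:
    """Return total nominal Gb/sec for each link type."""
    return {name: count * speed for name, (count, speed) in ports.items()}


if __name__ == "__main__":
    for name, total in aggregate_gbps(PORTS).items():
        print(f"{name}: {total} Gb/sec")
```

Run as-is, this prints 320 Gb/sec of InfiniBand, 80 Gb/sec of 10 GbE, and 10 Gb/sec of 1 GbE per node.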

The X4-8 rack also gets fatter PCI-Express flash cards in its storage servers, which come in at 800 GB a pop and deliver 44 TB of raw capacity per rack. With the flash compression that Oracle debuted with the Exadata X4-2 clusters last fall, a full rack has close to 90 TB of effective flash capacity. The X4-2 and X4-8 machines have the same 14 Exadata storage servers, which are available with either high-capacity or high-performance disk drives.
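The rack-level flash figures can be reproduced with a short calculation. This sketch rests on assumptions that the article does not state: four flash cards per storage server, 800 GB per card (the card size consistent with 44 TB spread across 14 servers), and a roughly 2:1 compression ratio inferred from the ~90 TB effective figure. None of these per-server constants come from Oracle documentation.

```python
# Sketch: rack-level flash capacity from per-card size.
# ASSUMPTIONS (not stated in the article): 4 cards per storage server,
# 800 GB per card, and roughly 2:1 flash compression.
STORAGE_SERVERS = 14          # from the article
CARDS_PER_SERVER = 4          # assumption
CARD_GB = 800                 # assumption, consistent with the 44 TB total
ASSUMED_COMPRESSION = 2.0     # assumption; real ratios depend on the data

raw_tb = STORAGE_SERVERS * CARDS_PER_SERVER * CARD_GB / 1000  # decimal TB
effective_tb = raw_tb * ASSUMED_COMPRESSION

print(f"raw: {raw_tb:.1f} TB, effective: ~{effective_tb:.0f} TB")
```

Under these assumptions the raw figure comes out at 44.8 TB and the compressed figure at about 90 TB, matching the article's rounded numbers.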

"The spinning disks are not really part of the performance story anymore," says Shetler, because this flash cache largely masks the differences between fast and slow spinning disks. "All of the physical I/O to the database comes out of this flash."
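Shetler's point about flash masking disk speed can be illustrated with the standard average-access-time formula: latency = hit_rate × flash latency + (1 − hit_rate) × disk latency. The latencies below are hypothetical round numbers chosen for illustration, not Exadata measurements, and `effective_latency_ms` is an illustrative helper.

```python
# Sketch: why a high flash hit rate hides the speed of the disks behind it.
# All latencies are HYPOTHETICAL round numbers, not Exadata measurements.
def effective_latency_ms(hit_rate: float, flash_ms: float, disk_ms: float) -> float:
    """Average read latency when a fraction hit_rate of reads is served from flash."""
    return hit_rate * flash_ms + (1.0 - hit_rate) * disk_ms


FLASH_MS = 0.5       # hypothetical flash read latency
FAST_DISK_MS = 5.0   # hypothetical high-performance disk
SLOW_DISK_MS = 10.0  # hypothetical high-capacity disk

if __name__ == "__main__":
    for hit in (0.0, 0.9, 0.99):
        fast = effective_latency_ms(hit, FLASH_MS, FAST_DISK_MS)
        slow = effective_latency_ms(hit, FLASH_MS, SLOW_DISK_MS)
        print(f"hit={hit:.2f} fast={fast:.3f} ms slow={slow:.3f} ms gap={slow - fast:.3f} ms")
```

Whatever latencies are plugged in, the gap between the slow-disk and fast-disk configurations equals (1 − hit_rate) × (slow − fast), so it shrinks in proportion to the miss rate as more of the I/O is served from flash.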

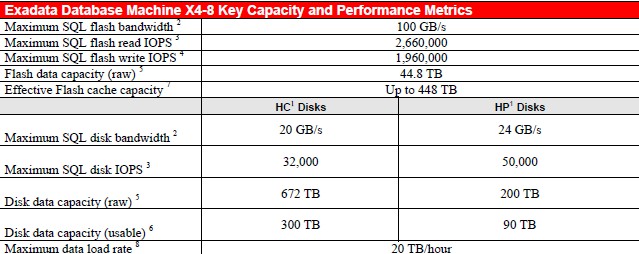

Oracle still offers disks running at different speeds in the Exadata storage servers, of course, as you can see in the table below showing the performance metrics of a rack of X4-8 iron:

The Exadata X4-2 offers customers the choice of running either Solaris or Oracle Linux on the database nodes, but the X4-8 only offers Linux. If customers want a fat memory node for Solaris, Oracle suggests they get an M Series Sparc-based NUMA machine, which scales up to 32 sockets and 32 TB of memory. Both Exadata machines can run the Oracle 11g or 12c databases, and you can link multiple racks of either machine together using Oracle's Real Application Clusters to scale out beyond one rack. Oracle RAC can scale to 16 or 32 nodes, but according to Shetler, the typical customer scales to eight nodes using the two-socket Xeon E5 servers, or maybe two or three nodes using the eight-socket Xeon E7 server. The X4-2 is appealing because it comes in sizes ranging from an eighth of a rack to a full rack. Customers buying full racks tend to be building large data warehouses or consolidating large numbers of databases that used to reside on separate physical machines. Big banks, manufacturers, and retailers tend to have the largest configurations, says Shetler.

Oracle is charging a slight premium for the new Exadata database node variant. The X3-8 machine, with either the high-capacity or high-performance disks, cost $1.35 million with two nodes, each with 2 TB of main memory. The X4-8 machine, with a base 2 TB of memory on each of its two nodes, costs $1.5 million. The extra compute and flash easily justify this extra cost. Those prices do not include the cost of the Oracle database, which dwarfs the pricing on the hardware at list price. At $47,500 per core, Oracle 11g or 12c Enterprise Edition would cost $11.4 million for a perpetual license when bought on a per-core basis for the 240 cores in a rack of Exadata X4-8 machines. That does not include Oracle RAC, partitioning, or any other extensions that might be needed to run the cluster.
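The pricing arithmetic in that paragraph is easy to reproduce. This sketch follows the article's straight per-core approach (two nodes × eight sockets × 15 cores = 240 cores). The `core_factor` parameter is an illustrative knob left at 1.0 to match the article's math; Oracle's actual licensing terms, such as its published processor core factor table, can change the licensable count, so treat the result as list-price arithmetic rather than a quote.

```python
# Sketch: reproduce the article's list-price arithmetic for one X4-8 rack.
SOCKETS_PER_NODE = 8
CORES_PER_SOCKET = 15
NODES_PER_RACK = 2
EE_PRICE_PER_CORE = 47_500  # USD, Enterprise Edition price cited in the article


def license_cost(cores: int, price_per_core: float, core_factor: float = 1.0) -> float:
    """Perpetual license cost; core_factor is a hypothetical multiplier (1.0 = article's math)."""
    return cores * core_factor * price_per_core


def premium_pct(new_price: float, old_price: float) -> float:
    """Percentage increase of new_price over old_price."""
    return 100.0 * (new_price - old_price) / old_price


if __name__ == "__main__":
    cores = NODES_PER_RACK * SOCKETS_PER_NODE * CORES_PER_SOCKET
    print(cores)                                         # 240
    print(license_cost(cores, EE_PRICE_PER_CORE))        # 11400000.0
    print(round(premium_pct(1_500_000, 1_350_000), 1))   # 11.1 (X4-8 vs X3-8 hardware)
```

The hardware premium works out to about 11 percent, while the database license alone is more than seven times the $1.5 million hardware price.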