AWS Talks Up Enterprise Wins, Adds Zocalo Collaboration

Amazon Web Services, the cloud computing arm of the world's largest online retailer, got the first-mover advantage in cloud computing and has been aggressively innovating for more than eight years to keep its sizeable lead over larger and in some cases much richer players that are trying to get their slice of the virtual IT pie. They are going to need more than money to take on AWS. It is going to take tenacity and a whole lot of luck as well.

AWS hosts summit events a couple of times a year on the east and west coasts to introduce new services and to also showcase what customers are doing with its public cloud.

The relentless addition of new services – AWS started out with the Simple Storage Service for object storage back in March 2006, which seems like a million years ago – as well as the increasing number of enterprise customers who are willing to step forward and admit, publicly, that they are using AWS for real work, not just for application development and testing, is part of the flywheel momentum that keeps AWS growing. As Werner Vogels, CTO at AWS, explained it, that flywheel spins faster and faster as AWS adds customers and increases the scale of its infrastructure and the breadth of its services, which creates a positive feedback loop that brings in more customers. Having 44 price cuts on services since AWS debuted hasn't hurt, either.

But there is more to it than just getting progressively more sophisticated services at lower and lower costs. "Agility now becomes the big buzzword," says Vogels. "Every organization we talk to asks us: Please help us become more agile. The basis of that is a very agile resource model. We have to look at why agility is such a problem, and we have to take a step back. There are bigger economic challenges happening that drive these new resource models. There is an abundance of products in the market, there is increasing competition, there is increasing power of consumers to choose what they want. And there is decreasing brand loyalty. Maybe ten years ago you were absolutely certain you could sell the next generation of your product to exactly the same customers that you sold the previous generation to, that is no longer the case. That guarantee is out of the window. Combine that with a lack of capital, and there is great uncertainty in the market, whether or not your products are going to be successful. In a world with limited capital, you need new resource models."

The interesting bit, explained Vogels, is that these flexible resource models have nothing to do with IT itself – this is the same way we have hired and laid off factory workers since the beginning of the Industrial Revolution. "In every part of the business, we have these very flexible resource models already – except in IT. And so the success of cloud and AWS is mostly driven because we have given you the resource model that is exactly what the economy needs and what businesses need right now instead of just being the next technology step. If cloud was the next step of technology from mainframe to mini to client/server to Web to cloud, it wouldn’t be this big. The impact that it has on businesses, to enable them to move faster, drives the success of cloud."

What Vogels did not say, but which is certainly true, is that customers will pay a premium for raw capacity to get that agility over and above what they might deploy inside of their own datacenters. Enterprises have always been willing to pay a premium for good technology – this is why mainframes or vector supercomputers persist for certain kinds of work.

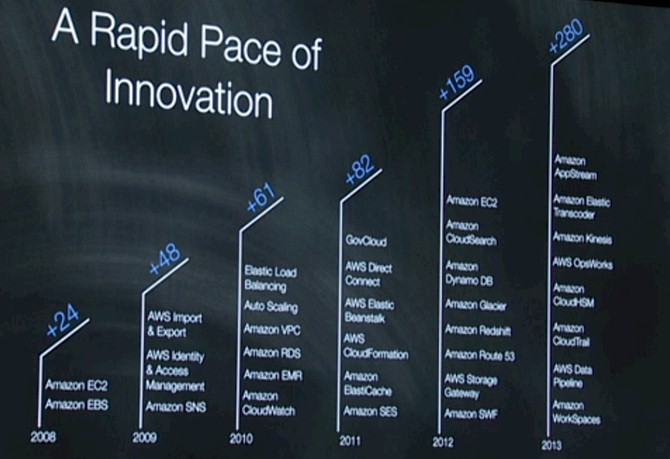

The ever-broadening portfolio of services on the AWS cloud is certainly adding momentum to that flywheel. Here is what the pace of change has been in the past six years on AWS, and as you can see, it is accelerating linearly:

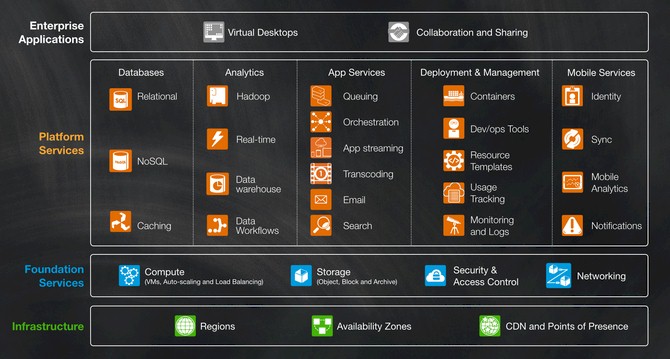

It is only June, and AWS has already put out nearly 200 new services or feature improvements, putting it well on its way to outpacing that earlier rate of advancement. And with today's announcement of the Zocalo document management service aimed straight at enterprises, here is what the AWS stack looks like, conceptually:

The interesting thing about today's announcements from AWS is that they are targeted at enterprises, and aimed not at raw compute but higher up the stack. The Zocalo service, which runs atop S3, is a document management and collaboration service that will allow people to share documents, alter them, and comment on them from PCs, tablets, smartphones, and other devices. The Zocalo service links into Active Directory and other authentication services, so users don't have to manage a separate set of passwords to use it, and users set sharing policies for documents within the service. Moreover, companies can set policies about where the data can – and cannot – be replicated to within Amazon regions, which makes the auditors happy, and there is a set of logging functions that keep track of every document touch and every activity of users on the Zocalo service. Zocalo is in limited preview starting today, and will cost $5 per user per month with the first 200 GB of storage free. There is a 30-day trial right now that is available for up to 50 users; if you are using the Workspaces virtual desktop service that was announced last year and which started shipping this year, Zocalo will be included.

Another useful service announced today by AWS is Logs for CloudWatch, which will automatically aggregate and monitor log files from AWS services and applications and dump them into Kinesis, the real-time stream processing service that Amazon announced earlier this year. Kinesis can scale to millions of records per second and take in data from hundreds to thousands of sources. The log file data is replicated to three availability zones, and the first 5 GB of data in the Logs for CloudWatch service is free for both ingesting and archiving. The first ten metrics, first ten alarms, and first million API requests are also free. After that, you pay 50 cents per GB per month for ingestion and 3 cents per GB per month for archiving.
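As a rough illustration of the Logs for CloudWatch pricing quoted above, here is a minimal Python sketch that estimates a monthly bill. The function name is hypothetical, and the choice to apply the 5 GB free allowance separately to ingestion and to archiving is an assumption, since the announcement as described here does not spell out how the free tier is split. The free metrics, alarms, and API requests are ignored.

```python
# Prices quoted in the article (US dollars per GB per month).
INGEST_PRICE_PER_GB = 0.50
ARCHIVE_PRICE_PER_GB = 0.03

# Free allowance quoted in the article. ASSUMPTION: it is applied
# separately to ingested data and to archived data.
FREE_GB = 5.0


def estimate_logs_monthly_cost(ingested_gb: float, archived_gb: float) -> float:
    """Estimate a monthly Logs for CloudWatch bill in dollars.

    Hypothetical helper for illustration only; it is not an AWS API.
    """
    if ingested_gb < 0 or archived_gb < 0:
        raise ValueError("data volumes must be non-negative")
    # Only the volume above the free allowance is billable.
    billable_ingest = max(0.0, ingested_gb - FREE_GB)
    billable_archive = max(0.0, archived_gb - FREE_GB)
    return (billable_ingest * INGEST_PRICE_PER_GB
            + billable_archive * ARCHIVE_PRICE_PER_GB)


if __name__ == "__main__":
    # Example: 105 GB ingested this month, 205 GB sitting in the archive.
    cost = estimate_logs_monthly_cost(105, 205)
    print(f"Estimated monthly cost: ${cost:.2f}")
```

Under these assumptions, ingestion dominates the bill: a gigabyte costs roughly 17 times more to take in than to keep for a month.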

One other small tweak to the AWS cloud is that SSD-based instances of the Elastic Block Store (EBS) service, which can provide burst data rates of up to 3,000 I/O operations per second, are now the default for the EC2 compute service.

AWS also rolled out a slew of services, including analytics and a software development kit, to make it easier for companies to deliver applications for mobile devices. This doesn't matter much in terms of accelerating applications, but it matters to enterprises who are looking to simplify the process by which they create applications. This is a big deal for enterprises, but is not, strictly speaking, about giving customers access to massive compute on a tight budget.

During his keynote, Vogels had Joe Simon, the CTO at publisher Conde Nast, come out and talk about its wholesale move to the AWS cloud, and he rolled a movie that showed the company removing slews of Sun Microsystems servers from the racks and putting a For Sale sign out in front of its Newark, Delaware datacenter. Saman Michael Far, senior vice president of technology for the Financial Industry Regulatory Authority, described how the organization was moving its fraud detection applications to AWS, building a system that takes in 30 billion market events every trading day, using S3 to store all of the market data coming out of NYSE, NASDAQ, and other exchanges, and using Hadoop and NoSQL datastores to chew on that data to look for patterns of illegal behavior.

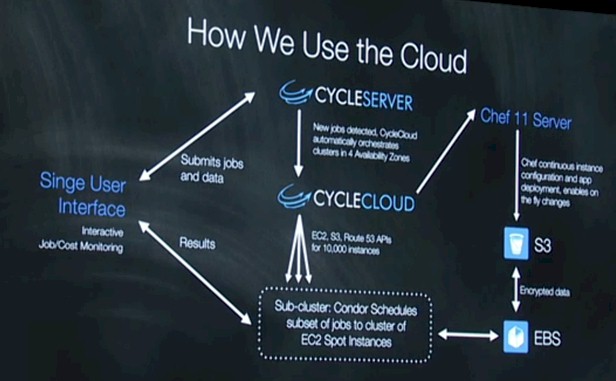

The most interesting presentation came from Steve Litster, global head of scientific computing at pharmaceutical company Novartis. Specifically, Litster works for the Novartis Institutes for Biomedical Research, which does drug discovery for cancer as well as for infectious, cardiovascular, respiratory, gastrointestinal, metabolic, and musculoskeletal diseases. (That would seem to cover most of them.) It takes about a decade and $1 billion to bring a drug successfully to market, so anything that can cut down that time or cost – or both – is desperately sought after. To give the cloud a whirl, Novartis wanted to test 10 million different compounds against a molecular binding site that might be helpful in cancer treatment, and the goal was to do it for under $10,000 on the Amazon cloud. Novartis partnered with Cycle Computing, whose CycleServer and CycleCloud tools are commonly used to fire up clusters on AWS to do simulation and modeling work.

Novartis estimated that running the simulation of 10 million compounds would have taken a cluster with at least 50,000 cores, which the company did not have at its disposal, and that it would take on the order of $40 million to build such a machine. So instead of asking for all that money, Novartis ran 10,600 EC2 instances on AWS, with a total of 87,000 cores, and screened 10 million compounds in 11 hours for a total cost of $4,232. For that small sum of money, Novartis was able to identify three promising compounds for cancer treatment, and now it wants to test millions of compounds. Novartis is also looking at how it might move its imaging, genetic sequencing, and other chemical modeling workloads into the AWS cloud.
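To put the Novartis figures in perspective, the back-of-the-envelope arithmetic can be sketched in a few lines of Python. The input numbers come straight from the presentation as reported above; the variable names are illustrative, and the core-hour figure assumes every one of the 87,000 cores was busy for the full 11 hours, which is an upper bound rather than something Novartis stated.

```python
# Figures reported from the Novartis presentation.
TOTAL_COST_USD = 4_232
COMPOUNDS_SCREENED = 10_000_000
CORES = 87_000
RUN_HOURS = 11
ESTIMATED_CLUSTER_COST_USD = 40_000_000  # "on the order of $40 million"

# ASSUMPTION: all cores were in use for the entire run, so this is an
# upper bound on the core-hours actually consumed.
core_hours = CORES * RUN_HOURS

cost_per_compound = TOTAL_COST_USD / COMPOUNDS_SCREENED
cost_per_core_hour = TOTAL_COST_USD / core_hours

# How many runs of this size the estimated in-house cluster budget
# would pay for, ignoring power, staffing, and other operating costs.
runs_per_cluster_budget = ESTIMATED_CLUSTER_COST_USD / TOTAL_COST_USD

if __name__ == "__main__":
    print(f"Core-hours (upper bound): {core_hours:,}")
    print(f"Cost per compound: ${cost_per_compound:.7f}")
    print(f"Cost per core-hour: ${cost_per_core_hour:.5f}")
    print(f"Runs per cluster budget: {runs_per_cluster_budget:,.0f}")
```

The comparison is deliberately crude, since a purchased cluster could be reused for years, but it shows why a one-off screening run of this size is an easy case for renting capacity.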