Costs Come Down So Equinix Weaves 100GbE Switches Into Datacenters

Everybody wants the fastest network they can get, but speed increases or latency reductions are usually not immediately affordable to any but the extreme cases. It takes time for a new technology to ramp, volumes to build, technology to improve, and costs to come down. So it has been with 100 Gb/sec Ethernet switching. But Equinix, which hosts some of the largest enterprises and clouds in the world in its datacenters, says the time is now right for 100 Gb/sec and is starting its rollout.

“The 100GE technology has been in use and has been evolving for a number of years now,” explains Ihab Tarazi, CTO at Equinix. “A lot of our customers have already deployed 100GE in their internal networks, so now it is a question of when do they need it for external interfaces.”

Equinix operates more than 100 datacenters worldwide, with over 4,500 customers and over 128,000 connections between these companies and the nearly 1,000 network providers who pump data in and out of the Equinix datacenters, which together carry a major part of the world's Internet traffic. The Equinix facilities are home to more than 1,000 clouds and also serve as major hubs for financial services trading applications. As Tarazi puts it, Equinix is focused first and foremost on providing co-location space and power, but because so many network providers and clouds are run from its datacenters, making fast links between these services is another benefit of locating in an Equinix facility.

“We are the most connected datacenter company out there, with rich, diverse ecosystems in all of our datacenters,” Tarazi says. “This is what customers really value when they come to our datacenters besides the infrastructure we provide.”

Equinix offers two different styles of peering services for companies and networks running inside of its datacenters. If there is a massive amount of traffic between two different end points – say two networks or a cloud and an enterprise customer – then Equinix can just provide a fiber optic link between those two end points and hook them up directly. The other option is to use more traditional IP switching, and this is, in fact, where Equinix is deploying 7500E modular switches with a new series of 100 Gb/sec line cards from Arista Networks.

Up until now, if companies or networks wanted to link over the Equinix IP network, they had a few options. Equinix dabbled a bit with 40 Gb/sec switches, but Tarazi tells EnterpriseTech that these machines did not provide the kind of price/performance that Equinix or its customers expected. For the most part, if customers wanted more bandwidth to connect inside of the facilities across the network exchange, Equinix provided 10 Gb/sec line aggregation to build a bigger pipe between two customers or networks. Equinix has used a variety of switch suppliers in the past for its 10 Gb/sec and 40 Gb/sec switches, but this time after a bakeoff, the 100 Gb/sec switch business at Equinix is going to Arista Networks.

Equinix customers are pressing for higher speeds on the network exchange without having to do line aggregation for a number of reasons.

“It gives them the ability to manage their traffic more effectively because you are not having to load balance across multiple 10GE interfaces,” explains Tarazi. “You can let the cloud peak or the network peak at whatever speed it needs because the backbone on a lot of those networks and clouds have already gone to 100GE. So if you have somebody on the network side with a 100GE backbone and somebody on the cloud side with a 100GE backbone, and they need to connect to each other or to others, it is much more effective for them to connect at the natural speed of their backbone at that point.”
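Tarazi's point about load balancing can be illustrated with a short sketch. Link aggregation generally pins each flow to one member link by hashing its header fields, so a single flow cannot run faster than one 10GE member no matter how many links are bundled. The Python below is an illustration only: the CRC32 hash, the flow tuple, and the `pick_member` helper are assumptions made for the example, not a description of how Equinix's or Arista's equipment chooses a link.

```python
import zlib

MEMBER_GBPS = 10    # speed of each aggregated 10GE link
MEMBER_COUNT = 10   # ten links bundled to approximate 100 Gb/sec

def pick_member(flow, member_count=MEMBER_COUNT):
    """Map a flow tuple to one member link with a stable hash (hypothetical scheme)."""
    key = "|".join(str(field) for field in flow).encode()
    return zlib.crc32(key) % member_count

def max_flow_rate_gbps(aggregated):
    """Ceiling for a single flow: one member link if aggregated, else the full port."""
    return MEMBER_GBPS if aggregated else MEMBER_GBPS * MEMBER_COUNT

# Hypothetical flow: source IP, destination IP, protocol, source port, destination port.
flow = ("10.0.0.1", "10.0.1.1", 6, 49152, 443)
member = pick_member(flow)  # the same flow always lands on the same member link
```

With a native 100GE interface there is no hashing step, so a traffic peak from one cloud or network is not confined to a 10 Gb/sec slice of the bundle.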

While the direct fiber links are useful as well, Tarazi says that Equinix customers are getting to the point that they need real 100 Gb/sec at the IP layer to aggregate multiple networks into a single interface.

The main barrier to adoption for 100 Gb/sec Ethernet connectivity has been the expense, but the lack of density with the switches was also a problem. “I think it is happening when it needs to happen,” Tarazi says about the timing of affordable 100 Gb/sec switching. “The prior line cards only had one port, which means moving to 100GE took up too much space in the switch. I would add that the prior optical technology was too expensive to be practical for most companies, and now with the latest modules from Arista it makes sense economically not just technically.”

That is certainly what Arista Networks believes, too, with its latest line cards for its top-end 7500E modular switches.

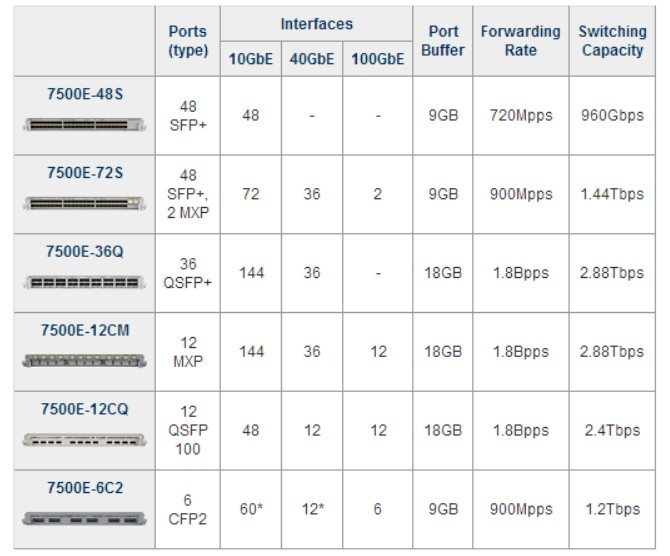

The 7500E modular switches are based on the “Dune” ASICs from Broadcom, and the top-end 7508E has eight line cards and 30 Tb/sec of switching capacity in an 11U chassis while the 7504E has room for four line cards with a total of 15 Tb/sec of switching capacity in its 7U enclosure. Arista has a mix of 10 Gb/sec, 40 Gb/sec, and 100 Gb/sec line cards for the switches, as you can see here:

The top-end 7508E can have up to 48 100 Gb/sec ports using the new six-port line card, or 288 40 Gb/sec ports or 1,152 10 Gb/sec ports. The existing 7500E-12CM line card has a dozen MXP multi-speed ports, uses special MXP cables, and has the optics built into the line card. The two new line cards use new optical transceivers that are less expensive than this approach, explains Martin Hull, senior product line manager at Arista Networks. The new line cards also support longer distances than the existing card. Hull says the company believes that 100 Gb/sec Ethernet will not be adopted until port density and fiber length are both increased. Moreover, he says, companies will not deploy 100 Gb/sec switches until the advanced features in the top-of-rack switches are in the modular machines and the cost of a 100 Gb/sec port is the same as or lower than that of ten 10 Gb/sec ports.

The 7500E-6C2-LC line card has six ports and uses a new hot-pluggable CFP2 port to achieve that density. It is half the size of the prior CFP transceiver and supports multi-mode and single-mode fiber optic cables. The CFP2 transceiver also consumes less power than the original CFP transceiver, which is a requirement in a lot of datacenters – particularly co-location facilities like those run by Equinix. This is, in fact, the line card that Equinix will be using. The company has 7508E modular switches in house, is doing early testing on the new line card and software, and expects to have it all up and running in its network exchange by the end of the second quarter. This is when Arista Networks expects to have the six-port CFP2 line card generally available.

The 7500E-12CQ-LC card uses a QSFP-100G transceiver that offers the lowest cost and the highest density among its 100 Gb/sec line cards. This card will have a dozen 100 Gb/sec ports, and the transceiver and switch combined only burn 3.5 watts per port. This line card will be available in the fourth quarter.
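The density figures for the two new cards reduce to simple multiplication, sketched below in Python. The slot counts, ports per card, and the 3.5 watt figure come from the article; the 96-port total for a 7508E full of 12-port cards and the per-chassis wattage are derived here by arithmetic and are not quoted from Arista. The wattage covers only the per-port transceiver and switch figure, not total chassis power.

```python
# Line card slots per chassis, as stated in the article.
SLOTS = {"7508E": 8, "7504E": 4}

# 100 Gb/sec ports per new line card, as stated in the article.
PORTS_100G = {"7500E-6C2-LC": 6, "7500E-12CQ-LC": 12}

# Transceiver plus switch power per port, quoted for the 12-port card only.
WATTS_PER_PORT_12CQ = 3.5

def chassis_ports(chassis, card):
    """Maximum 100 Gb/sec ports when every slot holds the given card."""
    return SLOTS[chassis] * PORTS_100G[card]

def chassis_port_watts(chassis):
    """Derived per-port power total for a chassis full of 12-port cards."""
    return chassis_ports(chassis, "7500E-12CQ-LC") * WATTS_PER_PORT_12CQ
```

Calling `chassis_ports("7508E", "7500E-6C2-LC")` reproduces the 48-port figure cited for the six-port card.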

You might be thinking that, with 10 Gb/sec ports just becoming more common on servers, the demand for 100 Gb/sec switches would be fairly limited at the moment. And you would be right, but that is changing fast as the economics and technologies are shifting.

“Larger scale datacenters where they have large numbers of servers are pushing traffic patterns where 100GE starts to make sense,” says Hull. “I would love to be able to say that the smaller customers in the world are needing 100GE already, but it is a volume-driven requirement. Service providers have been installing 100GE since it has been available on routers because they need big pipes. In the datacenter, we are going to see adoption of 40GE and 100GE in the aggregation layer, where they have multiple paths and multiple zones. It is where multiple 10GE or 40GE ports could be used, but 100GE is more efficient and cost effective.”

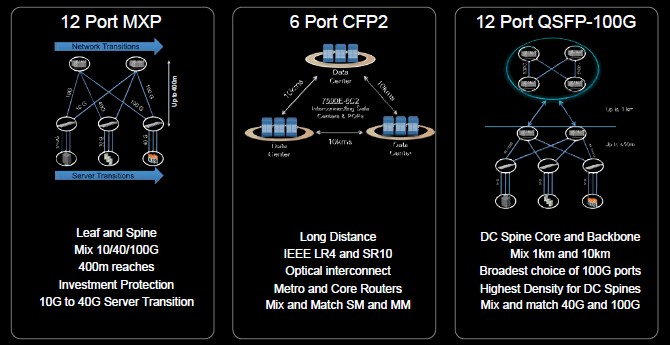

Here are the scenarios that Hull says make sense for 100 Gb/sec Ethernet in the datacenter right now:

Obviously, you can mix and match uses within one modular switch.

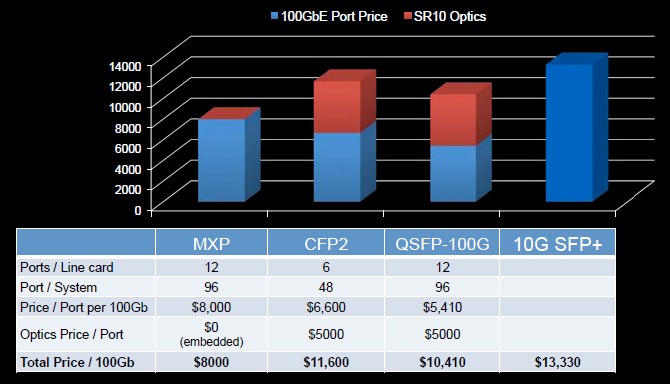

While the feeds and speeds of the new line cards are interesting, the pricing is what is going to make 100 Gb/sec take off, just as Tarazi said in reference to Equinix. Here is how Arista Networks is pricing the 7500E switches with the new line cards:

In the chart above, the cost of the various 100 Gb/sec line cards is compared to ten 10 Gb/sec ports in the same 7500E switch. These prices include the chassis, the line cards, and the optics where they are separate. These are list prices, of course, and it is not uncommon for the price to be cut in half on the street, especially when companies bring in multiple vendors on the deal.
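The comparison the chart makes can be written as a small check, sketched here in Python. The function names and the default 50 percent discount are assumptions for illustration (the discount mirrors the "cut in half" remark about street pricing); no actual Arista prices are encoded, so the per-port prices have to be supplied by the reader.

```python
def street_price(list_price, discount=0.5):
    """Estimate a street price from a list price; 0.5 means the price is cut in half."""
    return list_price * (1.0 - discount)

def at_parity(price_100g_port, price_10g_port):
    """True when one 100 Gb/sec port costs no more than ten 10 Gb/sec ports."""
    return price_100g_port <= 10 * price_10g_port
```

Because the same discount would normally apply to both sides of the comparison, the parity test gives the same answer at list and at street prices; it only shifts if the two port types are discounted differently.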

The one other interesting thing that Arista Networks has done with the modular switches is bring some of the features on its top-of-rack switches over to the 7500E. Specifically, the 7500E now supports VMware’s VXLAN Layer 2 overlay for Layer 3 networks, which allows for a collection of networks to be managed as one giant virtual network and, more importantly, allows virtual machines in one network to be live migrated to another network over Layer 3. VXLAN also allows service stitching and can extend a Layer 2 network beyond the 4,096 VLAN limit in a network. Arista has also brought over its DANZ data analysis and tap aggregation management features from the top-of-rack switches to the modular switches. Finally, the 7500E now has a simple spine upgrade feature, which allows for a modular switch being used as a spine in a leaf-spine network to be gradually taken out of the network path so it can be serviced without taking the network down.
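The 4,096 limit comes from the 12-bit VLAN ID field, while VXLAN carries a 24-bit network identifier (VNI) in an 8-byte header. The Python sketch below packs and parses that header following the layout in the VXLAN specification (RFC 7348); it is added here as an illustration and says nothing about how the 7500E implements the feature. The helper names are hypothetical.

```python
import struct

VLAN_ID_BITS = 12   # 802.1Q VLAN ID field
VNI_BITS = 24       # VXLAN Network Identifier field

def vxlan_header(vni):
    """Build the 8-byte VXLAN header: flags word, then VNI plus a reserved byte."""
    if not 0 <= vni < (1 << VNI_BITS):
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08 << 24            # I flag set (VNI is valid), other bits reserved
    return struct.pack("!II", flags, vni << 8)

def parse_vni(header):
    """Recover the VNI from an 8-byte VXLAN header."""
    _, word = struct.unpack("!II", header)
    return word >> 8

segments_vlan = 1 << VLAN_ID_BITS    # 4,096 possible VLAN IDs
segments_vxlan = 1 << VNI_BITS       # 16,777,216 possible VNIs
```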