LinkedIn Copes with Server Explosion with Revved Up CFEngine

The dream of every Internet startup is for an idea to take off and go mainstream. This is precisely the nightmare of every system administrator that works at these fast-growing companies. Luckily, most of them are adept at using and writing software, and if they need to tweak a product to make it work better in their large-scale environments, they have the skills to do so. Such is the case with business networking site LinkedIn and the CFEngine systems management tool.

CFEngine is now twenty years old, and it has come a long way since Mark Burgess, a post-doctoral fellow of the Royal Society at Oslo University, cobbled it together to manage a cluster of workstations in the Department of Theoretical Physics. More than 10 million servers in the world are managed by CFEngine today, which is a significant portion of all of the servers installed – something on the order of a quarter of all machines.

The popularity of CFEngine is due in part to the fact that it was open sourced under a GNU Public License, and has gone through three major release upgrades in that time with new features added. Burgess established a company bearing the CFEngine name in 2008, the same year CFEngine 3 was launched, to offer commercial-grade support for the system management tool to enterprise customers. In 2011, CFEngine secured $5.5m in Series A financing from Ferd Capital and moved its headquarters to Palo Alto, California to build up its business.

CFEngine uses an open-core distribution model, where the core product is distributed as open source but the extra goodies that enterprises want – and will pay for – come with license fees in the support contract. The latest release of the tool, CFEngine 3.5, came out in July and can manage 5,000 servers in a single policy engine instance.

By contrast, Hewlett-Packard's new OneView system and configuration management tool, developed in conjunction with PayPal, eBay, Intuit, Bank of America, and JPMorgan Chase and launched in September, only scales to 640 servers and their related switches and storage arrays. HP is promising to scale this up significantly.

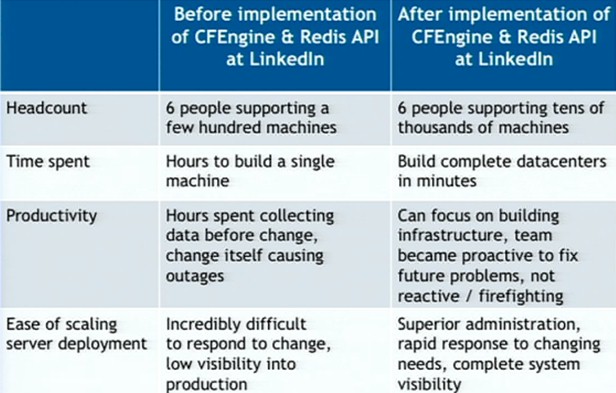

The scale of CFEninge was not sufficient for LinkedIn, explained Mike Svoboda, staff systems and automation engineer, who gave a presentation at the Large Installation System Administration conference in Washington, DC this week. Or more precisely, the scale might have been sufficient at first, but then LinkedIn learned that it didn't just need a configuration management system. It also needed a back-end telemetry system to keep CFEngine well fed and a means of querying the vast amount of systems data it wanted to store to run its operations better.

Those operations are now extreme scale, and growing fast. Three years ago, when Svoboda joined LinkedIn, the company had a very manageable 300 servers, and system administrators could use SSH or DSH (the distributed SSH) secure shells to log into servers and configure them. DSH could scale to maybe 80 to 100 servers, but the problem with this distributed manual approach is that you were never sure if the changes you broadcast to the systems actually were done. And so LinkedIn installed CFEngine to automate the management of its couple of racks of servers.

LinkedIn chose CFEngine because it was written in C and did not have any dependencies on Ruby, Python, Perl, and other languages, and also because it didn't consume a lot of resources and had no known security vulnerabilities. And that worked for a while. Then LinkedIn's popularity started growing by leaps and bounds, and today the company has more than 30,000 servers across its multiple datacenters, keeping track of what you are claiming to be doing at work.

"Everything at LinkedIn is completely automated," said Svoboda. "What we are starting to find is that we are no longer logging into machines to manage them. I come across machines that we have had in production for four to six months and it literally has two logins, and that was me when I built them machine. We have taken all aspects of CFEngine – configuring the OS, monitoring the hardware for failure, account administration, privilege escalation, software deployment, system monitoring – for literally everything that LinkedIn operations does to automate. One really good benefit of this is that we can build datacenters really fast. In some organizations, it may take them months of planning, but we can literally stand up a new datacenter in hours if not minutes."

But it has taken the company more than a year to get there. The problem that LinkedIn's IT staff was facing is that it needed to make somewhere between 10 and 15 changes across its server fleet every day, and at best it could do maybe one or two with the raw CFEngine using a semi-automated process. System changes were automated, but figuring out what systems to change was still heavily manual. LinkedIn had two problems. First, it wanted to be able to ask any question it could think of about its production clusters, and second, it didn't want to break the production environment by pushing changes to the systems.

"This is a very scary thing for people using configuration management for the first time," said Svoboda. "We can enforce system state, but it is very difficult to answer questions about tens or hundreds of thousands of machines. Automation doesn't give us visibility, but it gives us the tools to build it. As our scale and size increase, getting visibility becomes a more difficult problem."

So, for instance, it is very tough to find out what level on an operating system is installed across the server fleet. Or, maybe you want to know the configuration of hardware on all of the Web servers. Or maybe someone wants to know what machines are attached to a particular switch. And on and on and on. CFEngine can't do that easily across a fleet of machines, and neither can a staff of human beings banging away at SSH screens. These kinds of questions are important, especially when you are pushing out system changes and you want to make sure you are not going to break anything, but it took too much time to get answers.

"Data collection should not be complicated, and I should not have to spend hours of my day trying to make sure I am not going to break things before I make a change," Svoboda said. That means knowing where the exceptions are – whether they intentional because of differences in the applications or hardware, or unintentional because some system admin went rogue and made a change without using CFEngine. (Yes, this is frowned upon mightily.)

"No matter what automation product you use – CFEngine, Puppet, Chef, Salt, and do on – they all have the same common problem. Automation assumes that you know what you are going to do to your machines, and a lot of times, administrators don't know. You can write a policy that spans 30,000 machines, but if you don't really know, you can break things and cause outages."

What LinkedIn decided to lash a NoSQL data store to CFEngine. Both Memcached and Redis were evaluated, and because Redis already had some key commands that Memcached lacked and that LinkedIn would have to build themselves, Redis was chosen.

Once a day, the CFEngine policy engines across the LinkedIn datacenters query all of the servers in the fleet for pertinent data about their state. This data is fed up to a cluster of four Redis servers, which keeps all of this data in memory. There is a sophisticated round robin load balancer for both the CFEngine servers and the Redis servers to keep this process from bottlenecking. In the end, all of the state information for all 30,000 servers is pulled into the Redis key-value store once a day, and comparisons across that fleet can be done with simple queries. The data is sharded and replicated across each of those four servers so that queries can be run against any Redis node that is not busy at any given time.

To cut down on network bandwidth to and increase the amount of data that could be stored in the Redis cache in main memory, LinkedIn decided to use data compression, and after looking at several compression algorithms – lz4, lzma, zlib, and bz2 – it went with bz2 which offered the best combination of low CPU overhead and reasonably data compression.

Svoboda showed queries where he looked on the LinkedIn fleet for his own user account, and on a local cage of racks in a datacenter, the system could tell him how many machines he had root access to (487 as it turns out) in 1.5 seconds. Across an entire datacenter, his account was active on 8,687 machines and that query took 30.3 seconds to complete, and across the global fleet, Svoboda's account was on 27,344 machines and that query took only 1 minute and 39 seconds to run to find that out.

It is hard to imagine how long it would take to ask the same questions manually using SSH.