Oracle Lifts The Veil On Big Memory Sparc M6-32 System

The word on the street ahead of the Oracle OpenWorld extravaganza, which opened on Sunday evening in San Francisco, was that CEO Larry Ellison would take the stage and talk about the company's biggest and baddest server to date along with in-memory extensions for the Oracle 12c database. Those rumors turned out to be spot on.

The precise feeds and speeds of the "Big Memory Machine," as Ellison called the Sparc M6-32 system, were not available as EnterpriseTech went to press, but we will hunt them down. In the meantime, let's go over what we know from Ellison's keynote address.

The Sparc M6-32 is based on the twelve-core Sparc M6 processor, the second of the high-end Sparc processors that Oracle has launched since it took over Sun Microsystems in early 2010. The M6 has twice as many cores as the M5 processor it replaces; both chips are based on the "S3" core design that has also been used in the prior two generations of the Sparc T series chips aimed at entry and midrange servers.

The T4 and T5 have more cores and less L3 cache memory, while the M5 and M6 have fewer cores, have a lot more L3 cache (48 MB in this case), and are aimed at massively scaled, shared memory systems rather than bitty boxes. Each M6 core has eight threads, which means a single socket in the box has 96 individual threads for processing. On Java, database, and other parallel workloads that like threads, these chips will offer decent performance. It is not clear how well they will do on single-threaded jobs. Oracle has not yet divulged the clock speeds on the Sparc M6 processors, but the M5 chips, which were implemented in the same 28 nanometer chip making process as the M6, ran at 3.6 GHz.

The M6-32 system has a whopping 1,024 memory sticks in its chassis. That works out to 32 TB of main memory in a single system image with 384 cores and 3,072 threads. Ellison said that the 384-port interconnect, code-named "Bixby" and invented by Oracle, has 3 TB/sec of bandwidth linking the system together in a 32-way setup. The M6 chips are linked in groups of four into a single image using interconnect ports on the chips (much like a symmetric multiprocessing server), with the Bixby interconnect then hooking together multiple four-socket nodes into a single system with non-uniform memory access (NUMA) clustering. The M6-32 has 32 sockets, and the architecture can scale all the way up to 96 sockets. Ellison said that the M6-32 had 1.4 TB/sec of memory bandwidth and 1 TB/sec of I/O bandwidth.
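For readers who want to check the arithmetic behind those totals, here is a quick sketch in Python. The socket, core, thread, and memory stick counts are the ones quoted above; the capacity per stick and the number of sticks per socket are derived figures, not something Oracle stated, and they assume equal-sized sticks spread evenly across sockets and binary units (1 TB = 1,024 GB).

```python
# Figures quoted in the keynote coverage above.
SOCKETS = 32
CORES_PER_SOCKET = 12
THREADS_PER_CORE = 8
MEMORY_STICKS = 1024
TOTAL_MEMORY_TB = 32

# Totals for the single system image.
threads_per_socket = CORES_PER_SOCKET * THREADS_PER_CORE
total_cores = SOCKETS * CORES_PER_SOCKET
total_threads = total_cores * THREADS_PER_CORE

# Derived, not quoted: assumes binary units (1 TB = 1,024 GB),
# equal-sized sticks, and an even spread of sticks across sockets.
gb_per_stick = TOTAL_MEMORY_TB * 1024 / MEMORY_STICKS
sticks_per_socket = MEMORY_STICKS // SOCKETS

print(f"threads per socket: {threads_per_socket}")
print(f"total cores:        {total_cores}")
print(f"total threads:      {total_threads}")
print(f"GB per stick:       {gb_per_stick:.0f}")
print(f"sticks per socket:  {sticks_per_socket}")
```

Under those assumptions, the numbers line up with the 96 threads per socket, 384 cores, and 3,072 threads cited for the machine.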

"This thing moves data very fast and it processes data very fast," bragged Ellison.

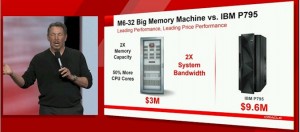

As he often does when he launches new systems, Ellison made a point of comparing his box to the largest IBM Power Systems machine, in this case the 32-socket, 256-core Power 795 server.

Presumably these are like-for-like comparisons, but without the details, it is hard to say how fair the comparison Ellison made above is.

In addition to being offered as a standalone server, the M6-32 will also be available as a so-called SuperCluster configuration, with Exadata storage servers (for speeding up database processing) hooking into the machine through a 40 Gb/sec InfiniBand network.

The Sparc M6-32 is available now and runs the Solaris 11 version of Unix. The chart above suggests that a 32-socket, 32 TB configuration should cost around $3 million.

While this machine is not limited to database processing, given the large memory footprint and the number of threads it can bring to bear, the M6-32 was certainly designed for database processing. Specifically, it is well matched to the new in-memory extensions to the Oracle 12c database that Ellison also previewed during his OpenWorld keynote.

These new in-memory database extensions created by Oracle are clever. Traditional databases store data in rows and index them for fast searching and online transaction processing. Newer databases (including the Exadata storage servers) use a columnar data store plus compression to radically speed up analytics pre-processing that is then fed up to the database.

With the new in-memory extensions, Oracle is now storing data in both row and column format, at the same time, and updating each to maintain transactional consistency across the formats. This puts overhead on the database, as Ellison explained, but because analytical indexes no longer need to be maintained and the columnar store is running in memory, there is less work for the database to do as information changes. (It doesn't have to update all of the OLTP and analytical indexes.) And thus, rows can be inserted somewhere between three and four times faster, transaction processing rates increase by a factor of two, and queries against the native columnar store residing in main memory run at least 100 times as fast as they do on the Oracle 12c database in row format running off disk drives.
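To make the dual-format idea concrete, here is a toy sketch in Python. It is not Oracle's implementation, and the `DualFormatTable` class and its method names are hypothetical, invented purely for illustration. It shows only the general technique: every write lands in a row store and a column store together, so single-record lookups read the row copy while an aggregate scans one contiguous column without any separate analytical index.

```python
class DualFormatTable:
    """Toy table (hypothetical) that keeps a row copy and a column copy in step."""

    def __init__(self, columns):
        self.columns = list(columns)
        self.rows = []  # row format: one dict per record
        self.column_store = {name: [] for name in self.columns}  # column format

    def insert(self, row):
        """Write one record to both formats and return its row id."""
        if set(row) != set(self.columns):
            raise ValueError("row must supply exactly the table's columns")
        self.rows.append(dict(row))
        for name in self.columns:
            self.column_store[name].append(row[name])
        return len(self.rows) - 1

    def update(self, row_id, name, value):
        """Change one field in both formats so the two copies stay consistent."""
        self.rows[row_id][name] = value
        self.column_store[name][row_id] = value

    def get_row(self, row_id):
        """Transaction-style access: fetch a whole record from the row copy."""
        return self.rows[row_id]

    def column_sum(self, name):
        """Analytics-style access: scan a single column, touching no other data."""
        return sum(self.column_store[name])


orders = DualFormatTable(["order_id", "region", "amount"])
orders.insert({"order_id": 1, "region": "west", "amount": 250})
second = orders.insert({"order_id": 2, "region": "east", "amount": 100})
orders.update(second, "amount", 175)

print(orders.get_row(second))
print(orders.column_sum("amount"))
```

A real database also has to handle concurrency, logging, and compression of the columnar copy, none of which this sketch attempts.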