Google Uses PUE Properly in POP Optimization

The constant chatter around PUE (Power Usage Effectiveness) draws frequent criticism, largely because organizations try to tell a whole story with a metric that was only ever meant to provide a snapshot. When The Green Grid introduced PUE a few years ago, it was intended as a way for a facility to compare itself against its own past performance, not as a yardstick for ranking data centers against one another.

Thus, it is refreshing when Google releases a case study in which PUE is used the way it was intended. Specifically, Google measured, modeled, and optimized the cooling for several of its “Points of Presence,” or POPs, which are comparable to small-to-medium-sized data centers.

To calculate PUE, divide the total power used by the facility by the power used by the IT equipment. Everything above the IT load is overhead, and most of it typically goes to cooling the machines. Lighting also contributes in large enough facilities, but the bulk of the Google case study focused on maximizing cooling efficiency.
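The calculation is simple enough to express in a few lines. This is a minimal sketch assuming simultaneous power readings in kilowatts; the function names and the cooling/lighting/other split are illustrative assumptions, not anything taken from Google's study.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_shares(cooling_kw: float, lighting_kw: float, other_kw: float = 0.0) -> dict:
    """Fraction of the non-IT overhead attributable to each (hypothetical) load category."""
    overhead_kw = cooling_kw + lighting_kw + other_kw
    if overhead_kw == 0:
        return {"cooling": 0.0, "lighting": 0.0, "other": 0.0}
    return {
        "cooling": cooling_kw / overhead_kw,
        "lighting": lighting_kw / overhead_kw,
        "other": other_kw / overhead_kw,
    }


if __name__ == "__main__":
    # Hypothetical readings, in kW, for illustration only.
    it_kw, cooling_kw, lighting_kw, other_kw = 100.0, 80.0, 5.0, 15.0
    total_kw = it_kw + cooling_kw + lighting_kw + other_kw
    print(f"PUE = {pue(total_kw, it_kw):.2f}")  # 200 kW total / 100 kW IT
    print(overhead_shares(cooling_kw, lighting_kw, other_kw))
```

A PUE of exactly 1.0 would mean every watt entering the building reaches the IT equipment; each tenth above that is overhead proportional to the IT load.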

Google started by doing what The Green Grid recommends for measuring PUE: placing sensors everywhere. A campaign to improve energy efficiency within a data center won't go very far without an intensive data collection effort. In addition to measuring temperature, Google also wanted to study how airflow affected server aisle temperatures.

With better measurement in place, Google next challenged the temperatures at which IT equipment is generally thought to need to run. Cooling is expensive for any company that moves as much data as Google does, yet conventional wisdom holds that the machines must be kept between 15 and 21 degrees Celsius.

However, according to the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE), cold aisles (aisles that receive cool air directly from the air conditioning system) can run as warm as 27 degrees Celsius. Google also noted that “most IT equipment manufacturers spec machines at 32°C/90°F or higher, so there is plenty of margin.”

An overview of a sample facility shows the layout: in the cold aisles mentioned above, the computer room air conditioners (CRACs) push in cool air, and in the hot aisles, the CRACs collect the exhaust.

According to Google, many CRACs also act as dehumidifiers, lowering the relative humidity to 40 percent and reheating the air if it gets too cold. Dropping these seemingly unnecessary (or at least inefficient) processes can produce savings right away.

“The simple act of raising the temperature from 22°C/72°F to 27°C/81°F in a single 200kW networking room could save tens of thousands of dollars annually in energy costs.”

When Google started this project, the average PUE in their POPs was 2.4, mainly because the cooling system was designed to support a much heavier IT load than was actually utilized. “The IT load for the room was only 85kW at the time, yet it was designed to hold 250kW of computer and network equipment. There were no attempts to optimize air flow. In this configuration, the room was overpowered, overcooled and underused.”
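Plugging the quoted room figures into the PUE definition shows how lopsided that configuration was. This sketch assumes, purely for illustration, that this particular room ran at the 2.4 POP average; the case study reports 2.4 as an average across POPs, not as this room's measured value. The helper name `room_profile` is hypothetical.

```python
def room_profile(it_load_kw: float, design_capacity_kw: float, pue: float) -> dict:
    """Summarize capacity utilization and non-IT overhead for a room at a given PUE."""
    total_kw = it_load_kw * pue  # PUE = total / IT, so total = IT * PUE
    return {
        "utilization": it_load_kw / design_capacity_kw,
        "total_kw": total_kw,
        "overhead_kw": total_kw - it_load_kw,
    }


if __name__ == "__main__":
    # 85 kW actual load and 250 kW design capacity are the figures quoted above;
    # applying the 2.4 average PUE to this one room is an assumption.
    it_kw = 85.0
    profile = room_profile(it_load_kw=it_kw, design_capacity_kw=250.0, pue=2.4)
    print(f"utilization: {profile['utilization']:.0%}")
    print(f"overhead:    {profile['overhead_kw']:.1f} kW for {it_kw:.1f} kW of IT load")
```

Under that assumption, the room would spend more power on overhead (about 119 kW) than on the 85 kW of IT equipment it exists to serve, while using roughly a third of its design capacity.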

The diagram below illustrates an initial inefficiency: cool air flows over the servers and straight to the exhaust ports. The ambient air is affected, and the servers are cooled, but not as quickly as they should be.

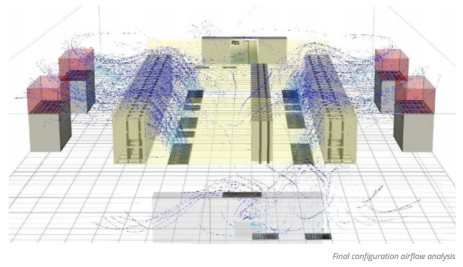

After its sensors had collected a significant amount of temperature and airflow data, Google ran computational fluid dynamics (CFD) simulations to determine how best to confine the cool air to the servers. The solution was plastic barriers enclosing the cold aisles, with refrigerator curtains running along their length to regulate and direct airflow. The result can be seen below.

Together with the raised thermostat settings mentioned earlier, these adjustments brought the average PUE down from 2.4 to 2.0.

The next step was identifying hotspots that could be brought back to a normal temperature efficiently. After the data had been collected but before any adjustments were made, Google produced the map below, which showed abnormally high temperatures near, unsurprisingly, the exhaust ports.

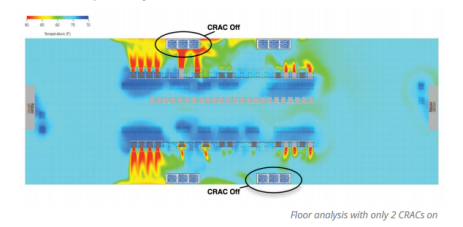
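Flagging hotspots from a sensor sweep can be sketched as a simple rule over cold-aisle readings. The rule here (any reading above the 27°C figure cited earlier, or more than a few degrees above the room median) is an assumption for illustration; the case study does not describe Google's actual criteria, and the sensor IDs and `find_hotspots` helper are hypothetical.

```python
from __future__ import annotations

from statistics import median


def find_hotspots(readings_c: dict[str, float],
                  limit_c: float = 27.0,
                  margin_c: float = 3.0) -> list[str]:
    """Return sensor IDs whose reading exceeds limit_c, or sits more than
    margin_c degrees above the median of all readings."""
    if not readings_c:
        return []
    typical = median(readings_c.values())
    return sorted(
        sensor for sensor, temp in readings_c.items()
        if temp > limit_c or temp - typical > margin_c
    )


if __name__ == "__main__":
    # Hypothetical cold-aisle inlet readings in degrees Celsius.
    readings = {"A1": 22.5, "A2": 23.0, "A3": 29.5, "A4": 22.0, "A5": 26.0}
    print(find_hotspots(readings))                # absolute-limit breach only
    print(find_hotspots(readings, margin_c=2.5))  # tighter relative margin
```

Combining an absolute limit with a relative one catches both readings that break the temperature target and readings that are merely out of line with the rest of the room.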

According to Google, the solution was fairly simple: it installed 48-inch sheet metal boxes around the CRAC intake grates, which improved airflow and leveled the return air across each CRAC. These adjustments also allowed Google to turn off two power-hungry CRACs, as shown in the diagram below.

Once those adjustments were combined with simple measures such as automatically turning off the lights when the building is unoccupied, the PUE fell to 1.7.

“With these optimizations, we reduced the number of CRACs from 4 to 2 while maintaining our desired cold aisle temperature. If you compare our floor analysis at this point with our initial floor analysis, you’ll notice that the cold aisle is cooler and the warm aisle is about the same—this is with the thermostat turned up and only half the number of air conditioners turned on,” Google noted in the case study.

Now, a PUE of 1.7 does not sound particularly impressive on its own, given the estimates of 1.3 and lower that one hears from designers of new data centers. However, since PUE is a tool for measuring a facility against its own past performance, Google applied the metric admirably.
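Measuring against one's own baseline is easy to make concrete. This sketch holds the IT load fixed at the 85 kW quoted for the networking room and applies the three average PUE figures reported above to it; that pairing, and the assumption of a constant load around the clock, are illustrative simplifications rather than Google's published numbers.

```python
from __future__ import annotations

HOURS_PER_YEAR = 8760


def compare_to_baseline(stages: list[tuple[str, float]], it_kw: float) -> list[dict]:
    """For each (label, PUE) stage, report overhead power and the yearly energy
    saved relative to the first (baseline) stage, assuming a constant IT load."""
    baseline_pue = stages[0][1]
    report = []
    for label, pue in stages:
        report.append({
            "stage": label,
            "pue": pue,
            "overhead_kw": it_kw * (pue - 1.0),
            "saved_kwh_per_year": it_kw * (baseline_pue - pue) * HOURS_PER_YEAR,
        })
    return report


if __name__ == "__main__":
    stages = [
        ("initial", 2.4),
        ("cold-aisle containment, warmer setpoints", 2.0),
        ("CRAC intake boxes, two CRACs off, lighting controls", 1.7),
    ]
    for row in compare_to_baseline(stages, it_kw=85.0):
        print(f"{row['stage']:<52} PUE {row['pue']:.1f}  "
              f"overhead {row['overhead_kw']:6.1f} kW  "
              f"saved {row['saved_kwh_per_year']:>9,.0f} kWh/yr")
```

Whatever the load, moving from 2.4 to 1.7 cuts the overhead per kilowatt of IT load from 1.4 kW to 0.7 kW, so the non-IT overhead is halved even though the headline number drops by less than a third.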

Further, for institutions looking to keep costs down, the investment required to make these adjustments averaged $25,000, while the power savings alone totaled $67,000 per year.
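Those two figures imply a short payback period. The sketch below computes simple payback, which ignores financing, maintenance, and changes in energy prices; treating the $67,000 as a steady annual saving is an assumption, and `simple_payback_months` is a hypothetical helper name.

```python
def simple_payback_months(investment: float, annual_savings: float) -> float:
    """Months of savings needed to recover an up-front investment (no discounting)."""
    if annual_savings <= 0:
        raise ValueError("annual savings must be positive")
    return 12.0 * investment / annual_savings


if __name__ == "__main__":
    investment, annual_savings = 25_000, 67_000  # figures quoted above
    months = simple_payback_months(investment, annual_savings)
    print(f"payback: {months:.1f} months")
    print(f"first-year net savings: ${annual_savings - investment:,}")
```

On those figures the work pays for itself in well under half a year.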

So while PUE is wildly overused, here is an example of an institution applying PUE’s original principles, such as intensive self-measurement, to achieve a genuine improvement in energy efficiency. Google, incidentally, also saved itself some money in the process.