Does Green Sacrifice Performance for Efficiency?

Discussions of energy-efficient high performance computing are full of detail, but once you slog through all the mentions of UPSs, ARM chips, and air and water cooling systems, you arrive at one central question: to what extent should performance be sacrificed for efficiency’s sake?

Three researchers at the Walchand College of Engineering in Sangli, India tackled this problem in a study detailing energy awareness in high performance computing.

When energy efficiency is built into high performance computers, there will almost certainly be a tradeoff between efficiency and performance. The key, for those who want or need to explore that tradeoff, is to ensure that as little performance as possible is wasted.

A significant portion of the research focused on making HPC systems aware of their own energy requirements. To do that, facilities need to deploy devices that track the amount of energy used in specific areas. Luckily, many data centers are already designed to do this, both for maintenance purposes and for determining metrics such as PUE, which matter to any facility interested in higher energy efficiency in the first place.

On that note, the group identified three areas where measurements can be taken and automatic adjustments made. Falling under the umbrella of Adaptive Systems Management, those three areas are hardware reconfiguration, resource consolidation, and programming language support, as shown in the figure below.

“System hardware can be reconfigured to low power states as per power requirements of the situation,” the study notes. Again, this implies a self-aware system, likely one that relies on software to regulate machine idle times. “This also gives benefit of minimum power on time since its quick to change states than to reboot the system,” the team concluded.

Resource consolidation is more or less a way of thinking about how to complete a workload using fewer servers. The online auction company eBay applied this principle and found some creative coding fixes that eliminated the need for hundreds of servers.

Such code fixes aren’t always found, however, and the team argues that an institution may need to find similarly creative and complex solutions. “If multiple under-utilized resources are found then we try to consolidate their work on fewer numbers of resources and shutting down free resources. This may lead to complex configuration of data and applications in order to support the consolidation decisions,” they noted. “Dynamic check pointing can be the solution with overhead of memory and frequent updates to check point.”
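
The consolidation idea in that quote can be illustrated with a minimal sketch. It is not the researchers' method: it assumes each job's load is known as a fraction of one node's capacity and uses a simple first-fit decreasing packing. The function name `consolidate`, the job names, and the node names are all hypothetical, and the sketch ignores the checkpointing and data migration costs the study mentions.

```python
def consolidate(jobs, node_names, capacity=1.0):
    """Pack jobs onto as few nodes as possible (first-fit decreasing).

    jobs: dict mapping job name -> load, as a fraction of one node's capacity
          (an assumed input; the article does not say how load is measured).
    Returns (placement, idle_nodes). Nodes in idle_nodes received no work
    and are candidates for being powered down.
    """
    placement = {name: [] for name in node_names}
    used = {name: 0.0 for name in node_names}

    # Place the largest jobs first; each goes on the first node with room.
    for job, load in sorted(jobs.items(), key=lambda kv: kv[1], reverse=True):
        for name in node_names:
            if used[name] + load <= capacity + 1e-9:  # small float tolerance
                placement[name].append(job)
                used[name] += load
                break
        else:
            raise ValueError(f"no node can host {job!r}")

    idle_nodes = [name for name in node_names if not placement[name]]
    return placement, idle_nodes


# Four lightly loaded jobs that previously occupied one node each.
placement, idle_nodes = consolidate(
    {"a": 0.3, "b": 0.2, "c": 0.4, "d": 0.1},
    ["n1", "n2", "n3", "n4"],
)
print(placement["n1"], idle_nodes)
```

In this toy case all four jobs fit on `n1`, leaving three nodes free to shut down. A real system would also have to weigh the cost of moving state between nodes.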

Incidentally, as noted in the eBay example, a decent amount of that resource consolidation can be achieved through the third area, programming language support. Specifically, HPC applications are often built with no mind toward energy efficiency. Job schedulers optimize for maximum performance, eschewing energy concerns. This makes sense, as those job schedulers are rarely fed energy data.

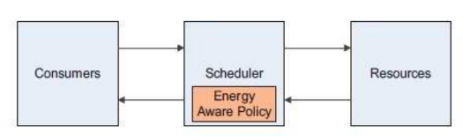

Building an “Energy Aware Policy” component into the scheduler would both raise demand for such data and push computations to flow in a greener manner. “For example,” the researchers said, “sequence matching tools in bioinformatics can be configured to use resources with power constraints.”
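
As a rough illustration of what such a policy component might do, the sketch below picks, among nodes with enough free cores, the one with the lowest estimated power draw for the job, optionally under a power cap. It is an assumption-laden toy, not the study's scheduler: `NodeState`, `pick_node`, and the `watts_per_core` figure are hypothetical stand-ins for whatever metered energy data a facility actually feeds in.

```python
from dataclasses import dataclass


@dataclass
class NodeState:
    name: str
    free_cores: int
    watts_per_core: float  # hypothetical metered value supplied to the scheduler


def pick_node(nodes, cores_needed, power_cap_watts=None):
    """Return the eligible node with the lowest estimated draw, or None."""
    candidates = []
    for node in nodes:
        if node.free_cores < cores_needed:
            continue  # not enough capacity for this job
        estimate = cores_needed * node.watts_per_core
        if power_cap_watts is not None and estimate > power_cap_watts:
            continue  # would violate the job's power constraint
        candidates.append((estimate, node))
    if not candidates:
        return None
    # Lowest estimated power wins; ties are broken by node name.
    return min(candidates, key=lambda c: (c[0], c[1].name))[1]


nodes = [NodeState("fast", 16, 12.0), NodeState("efficient", 8, 5.0)]
small_job = pick_node(nodes, cores_needed=4)
large_job = pick_node(nodes, cores_needed=12)
capped_job = pick_node(nodes, cores_needed=12, power_cap_watts=100)
```

Here the small job lands on the lower-power node, the large job only fits on the faster one, and the capped job is not placed at all. A performance-only scheduler would have no reason to make the first choice, which is the tradeoff the article describes.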

This would imply that the energy-efficient way is not the natural way, meaning there must be some loss in performance. That raises the question: how much performance is lost, and is it worth it?

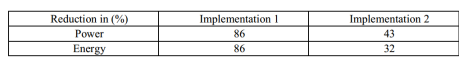

The researchers answer the first question by testing two green implementations, each regulating an 8-node system: one at the network level and one at the operating system/cycle server level, with the results listed below. Implementation one refers to the network application, while implementation two refers to that for the server.

Clearly, the application that adjusted the network according to power and energy needs outperformed the server application. Note that for both applications, the reduction in performance was just below 20 percent.

That 20 percent loss in performance may be deemed unacceptable by some institutions. However, for those with the ability or willingness to feed energy data into such an application, that 20 percent loss in performance comes with a reported 86 percent reduction in power requirement.
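
To see what those two figures could mean for energy per job, here is a back-of-the-envelope calculation. It rests on assumptions the article does not confirm: that "performance" means throughput, so a 20 percent loss stretches runtime by 1/0.8, and that the 86 percent figure is a reduction in average power draw over the run. The function name is hypothetical.

```python
def relative_energy_per_job(perf_loss, power_reduction):
    """Energy per job relative to baseline, given fractional losses.

    Assumes energy = average power x runtime, and that runtime scales
    inversely with performance (both are simplifying assumptions).
    """
    runtime_factor = 1.0 / (1.0 - perf_loss)   # 20% slower -> 1.25x runtime
    power_factor = 1.0 - power_reduction       # 86% less power -> 0.14x draw
    return runtime_factor * power_factor


ratio = relative_energy_per_job(perf_loss=0.20, power_reduction=0.86)
print(f"energy per job: {ratio:.3f}x baseline")
```

Under those assumptions each job would use about 0.175 times the baseline energy, roughly an 82 percent saving, even after accounting for the longer runtime.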

Part of the pushback against moving toward something like an exascale system involves its power requirements. Research like the study profiled here could pave the way for a large system that remains sustainable.
