Goldman Sachs And Fidelity Bank On Open Compute

What started five years ago as Facebook's desire to control its own infrastructure, and evolved into the Open Compute Project to open source hardware and datacenter designs, is transforming the way infrastructure is designed and manufactured in the IT industry. Nothing makes this clearer than the fact that financial services powerhouses Goldman Sachs and Fidelity Investments have been early and enthusiastic supporters of open source hardware and are working to deploy it in their own datacenters.

Just as supercomputing facilities have put downward pressure on prices and compelled a relative handful of IT vendors to build very specific machines to suit their needs, hyperscale customers want both flexibility and customization in system designs. They are no longer willing to make do with what the makers of general-purpose servers, storage, and networking gear provide, and they are similarly unhappy with the two-to-three-year cadence on which vendors build new products.

They also want to spend a lot less money on their infrastructure and its operations.

At the Open Compute Summit in San Jose this week, Jay Parikh, vice president of infrastructure at Facebook, which founded the Open Compute Project three and a half years ago, said that Facebook has saved an estimated $1.2 billion on IT infrastructure costs over the past three years by doing its own designs and managing its own supply chain. CEO Mark Zuckerberg later added that on top of these savings, Facebook has saved enough energy to power 40,000 homes for a year and has reduced carbon dioxide emissions by an amount equivalent to taking 50,000 cars off the road for a year.

To put those savings into perspective: Facebook had combined revenues of $10.8 billion from 2010 through 2012 inclusive, while its net income over that period was $1.07 billion and, significantly, only $32 million in 2012. The estimated $1.2 billion in savings is larger than that entire three-year net income. If Facebook had not done its own server and datacenter designs, it might not be a profitable company.
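As a rough back-of-the-envelope check, using only the figures quoted above and ignoring taxes as well as the fact that the three-year savings window and the 2010 through 2012 income window do not line up exactly, subtracting the savings from the cumulative net income gives:

$$
\underbrace{\$1.07\,\text{B}}_{\text{net income, 2010--2012}} \;-\; \underbrace{\$1.2\,\text{B}}_{\text{estimated savings}} \;\approx\; -\$0.13\,\text{B}
$$

On those simplifying assumptions, carrying the higher infrastructure costs would have turned the cumulative profit into a loss of roughly $130 million.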

This, as much as any hardware design, is going to catch the attention of corporate and government datacenters the world over.

The change to the IT supply chain that Open Compute is enabling obviously benefits hyperscale datacenter operators like Facebook, Rackspace Hosting, and Microsoft, which just joined the Open Compute Project. Component suppliers like Intel and Seagate are eager to sell to them in bulk, and the evolving ecosystem of companies that are becoming a new class of design and manufacturing partners for systems, storage, and networking is also eager to work with them. But Open Compute is also benefiting organizations like Goldman Sachs and Fidelity Investments, which are smaller in terms of their IT infrastructure, though not in revenue or market capitalization. The expectation is that the Open Compute community of users and certified vendors will eventually be able to create enterprise-grade products that are suitable for a wider array of customers.

All of the participants in the Open Compute Project, and the many thousands more watching from the sidelines, want the same thing: to spend less money on infrastructure while getting IT gear that is better tuned for their workloads, along with a choice of software to run on that gear. The IT industry has been delivering systems that support multiple operating systems for years, and the project has spent the past year pushing to open up datacenter switches and give them the same kind of flexibility.

That doesn't mean the transition from traditional IT supplier relationships to the Open Compute way is an easy one.

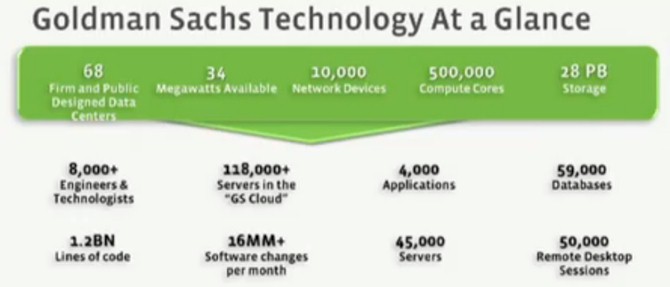

"Unlike many of the other firms that are adopting OCP hardware, we are a 150-year-old concern with an existing infrastructure," explained Grant Richard, managing director of technology infrastructure at Goldman Sachs, who gave a presentation at the Open Compute Summit in San Jose this week.

Goldman Sachs has 68 datacenters worldwide, some designed in-house and some designed by others. Some facilities consist of a few racks of equipment at an edge location, while others burn 6 or 7 megawatts of juice. Add it all up, and Goldman Sachs has 34 megawatts of available electrical capacity to power and cool those datacenters.

The company has a growing server fleet of 118,000 machines with over 500,000 aggregate cores spread across them, and a total of 28 PB of disk storage across a wide variety of devices. It runs a whopping 4,000 applications – absolutely typical for a big bank – backed by some 59,000 databases. Goldman Sachs also supports a cornucopia of system types and operating systems, which further separates it from the hyperscale datacenter operators, who tend to run their own variant of Linux and a relatively modest number of applications. Goldman Sachs has around 8,000 people on its IT staff, including application developers, system engineers, security experts, system administrators, and infrastructure tools coders.

The big applications at Goldman Sachs include a monster risk analysis and pricing cluster (Richard did not specify its size) as well as another large cluster that provides virtual desktops to the company's 32,600 employees. The company is also building a compute cloud based on OCP hardware as a new home for applications.

The tough issue for Goldman Sachs is all of that application code. The investment bank has about 1.2 billion lines of code spread across those applications.

"This is a fairly substantial code tree, and some of that code is older than I am," joked Richard. "This is code that we need to move into this environment, and this is where we start."

Goldman Sachs was at the initial OCP launch, and the company was concerned that so much of the effort focused on hardware and so little on management tools. So Richard chaired the project's hardware management effort to get it rolling. The company also liked the server and rack designs that Facebook and then others started contributing, but for an operator of legacy datacenters, the triple-bay Open Rack that Facebook developed for its own use was not appropriate. Goldman Sachs and its financial services peers, including Fidelity Investments, needed a bridge between the standard 19-inch racks in use in corporate datacenters and the Open Rack. (More on that in a moment.)

So several financial services firms, including Goldman Sachs and Fidelity Investments, drove the creation of two-socket motherboards for servers that fit in a standard 19-inch rack, code-named "Decathlete" for the Xeon version and "Roadrunner" for the Opteron version. These boards were code-named "Swiss Army Knife" internally, and the idea was that they would suit a variety of needs for application serving, databases, storage, high performance computing, and VDI workloads.

"We wanted to have a platform that could service all of these needs," says Richard. This is a tall order. But if there is one thing that large enterprises want – and need – it is to eliminate variety in their infrastructure wherever possible. This is how you save money. (Ask Southwest Airlines about that, since it did the same thing with its airplane fleet.) Goldman Sachs gave Intel specs for what became the Decathlete board and has subsequently stress tested the BIOS, Linux and Windows, and virtualization layers for it, much as an IT supplier would.

The other thing that Goldman Sachs needed was a brand for these machines, with certification coming from vendors and with the liability protections that are normally assumed by the tier one IT equipment makers and then back-ended by component warranties.

"Even though we tested everything, one of the challenges that we had was the supply chain," explained Richard. "How do we order things? How do we bring them in? How do we do legal contracts for liability? When you bring a server in, there are some expectations. Typically, in the old-style legacy market, all of that was hidden from us. So as customers, we had integrators, and we use the term loosely. It was anybody who supplied us with a complete unit. So this was the large, classic integrators, and behind them were the manufacturers. There was a tremendous amount that was hidden from us – both good and bad. The model today is really that the people who are putting those together for us, as well as us, the customer, are in a tight partnership. And that partnership is really going to pay off."

This time next year, at the next Open Compute Summit, Richard expects Goldman Sachs to have a "significant footprint" of OCP hardware in its datacenters.

Bridging The Gap

It may seem silly that the configuration of a rack could hold up innovation and rapid adoption of a new technology, but datacenters are designed specifically for certain sized racks. The loading docks and doors are a certain size, and in many raised floor environments there are weight constraints that limit the size of a rack as well as floor tiling schemes that were created to match a rack to the floor.

If you are Facebook and you are pouring new concrete for a remote datacenter in Oregon, North Carolina, or Sweden, you can make your rack any size you want. But if you fill a triplet Open Rack with disk drives, as Facebook did, you may find that a 5,000-pound rack of storage servers can get away from IT personnel and almost hurt someone as it is being brought into the datacenter. This actually happened, and that is why the cold storage facility Facebook opened late last year uses a modified single-tier Open Rack. The triplet is too dangerous.

Fidelity Investments wanted its own jack-of-all-trades system board, and it has spearheaded the "Roadrunner" two-socket Opteron motherboard project at OCP. (AMD last week updated its Opteron line with CPUs specifically designed for this board and aimed precisely at financial services and cloud providers.) The investment firm was also the key designer of what is being called the Open Bridge Rack, a hybrid rack that can be set up with either standard 19-inch EIA slots for IT gear or the 21-inch slots that are needed for Open Compute systems and power shelves.

Brian Obernesser, director of datacenter architecture at Fidelity, explained that Facebook's original Open Rack had two features that made it unsuitable for Fidelity's datacenters. First, the power shelves were welded into the rack. Second, it did not support standard 19-inch equipment. So Fidelity's engineers came up with a vertical rail system for the Open Rack that can be removed, rotated, and put back in the rack to support either 19-inch or 21-inch widths. The conversion took about 75 minutes at first, but Fidelity is getting better at it and thinks it can get the time down to about 45 minutes with a proper set of documentation.

The Open Bridge Rack is 48U high (that's seven feet) and can therefore hold 48 EIA units of gear or 40 Open Rack units. (The gear designed for standard Open Racks used by Facebook is taller and wider, and the idea is to allow for three server sleds that are each slightly wider than a 3.5-inch disk drive, as well as a power supply and more disks beside that, to fit side-by-side in a server enclosure, all accessible from the front.) The Open Bridge Rack has two power shelves that are removable, and 400 volt and 208 volt units will be available. Obernesser says that the rack will be able to host up to 15 kilowatts of gear, and that Fidelity is working on a further modification that will allow for telecommunications gear, which is 23 inches wide, to be railed in. The Open Bridge Rack was designed in conjunction with Intel, Goldman Sachs and power supply and rack makers Legrand, ElectroRack, Delta, and CTS. The specifications and schematics for the Open Bridge Rack have been contributed to the OCP.
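The "seven feet" figure follows from the standard EIA rack unit, which is 1.75 inches (44.45 mm) tall:

$$
48\,\text{U} \times 1.75\,\tfrac{\text{in}}{\text{U}} = 84\,\text{in} = 7\,\text{ft} \approx 2.13\,\text{m}
$$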

Fidelity did not divulge its rollout plans for OCP gear, but EnterpriseTech knows that Fidelity is working with Hyve Solutions for at least some of its infrastructure.

OCP Momentum Is Building

The Open Compute Project has 150 members across a range of customers and suppliers. But the size of the effort is not what is important, says Frank Frankovsky, who is chairman of the organization as well as vice president of hardware design and supply chain at Facebook. What is significant is the way OCP is changing how systems get created and rolled into facilities – and who is doing it.

Last year, Avnet, one of the largest IT distributors in the world, and Hyve Solutions, the custom system unit of Synnex, another large IT distributor, were certified as OCP solution providers. This is a formal designation for a distributor/manufacturer that can take OCP designs and build to them with the OCP's blessing and guarantee of compatibility. Now, AMAX, Penguin Computing, Quanta, CTC, and Racklive have become official solution providers, widening the options and deepening the supply chain for OCP gear. Enterprise customers like to be able to have multiple sources for their equipment, and with OCP designs, they have the possibility of having multiple suppliers with compatibility across those suppliers, down to the form factor and system management layers in the systems. This is a new thing for the IT market.

"These are companies that are building their businesses from the ground up with open source communities in mind," said Frankovsky. "Instead of predefining a roadmap of products and then trying to find a problem that it fits, these are businesses that are based on consultative selling."

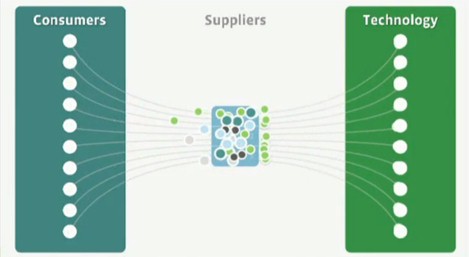

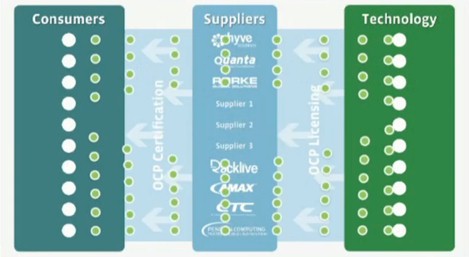

As an analogy, Frankovsky use a metaphor from the networking world. Here's what the IT supply chain looks like today:

And here is what the OCP chain looks like:

"The solution provider network plays a critical role in being able to deliver the benefits of a converged infrastructure without the proprietary bullshit that typically comes with it," Frankovsky said with calm bluntness. "No muss, no fuss. Deal with your solution providers, and get an open source solution that will be bespoke for you."

The analogy is to a non-blocking network switch, making a high-speed, low-latency interconnect between the creators of a particular technology component – a processor, a memory stick, a motherboard, a disk or flash drive, an operating system, and so on – and the ultimate consumer of those devices. The fundamental principle is that the consumer knows more about their IT shop than traditional IT vendors do, and working with parts suppliers creates a direct feedback loop between customers and suppliers.

"This is a beautiful business model," said Frankovsky. "For some odd reason, we continue to use a bottlenecked business model to support scale out computing."

Perhaps not for long, if OCP players have anything to say about it.